Posts tagged ‘block-based languages’

A Scaffolded Approach into Programming for Arts and Humanities Majors: ITiCSE 2023 Tips and Techniques Papers

I am presenting two “Tips and Techniques” papers at the ITiCSE 2023 conference in Turku, Finland on Tuesday July 11th. The papers present the same scaffolded sequence of programming languages and activities, just in two different contexts. The complete slide deck in PowerPoint is here. (There’s a lot more in there than just the two talks, so it’s over 100 MB.)

When I met with my advisors on our new PCAS courses (see previous blog post), one of the overarching messages was “Don’t scare them off!” Faculty told me that some of my arts and humanities students will be put off by mathematics and may have had negative experiences with (or perceptions of) programming. I was warned to start gently. I developed this pattern as a way of easing into programming, while showing the connections throughout.

The pattern is:

- Introduce computer representations, algorithms, and terms using a teaspoon language. We spend less than 10 minutes introducing the language, and 30-40 minutes total of class time (including student in-class activities). It’s about getting started at low-cost (in time and effort).

- Move to Snap! with custom blocks explicitly designed to be similar to the teaspoon language. We design the blocks to promote transfer, so that the language is similar (surface level terms) and the notional machine is similar. Students do homework assignments in Snap!.

- At the end of the unit, students use a Runestone ebook, with a chapter for each unit. The ebook chapter has (1) a Snap! program seen in class, (2) a Python or Processing program which does the same thing, and (3) multiple choice questions about the text program. These questions were inspired by discussions with Ethel Tshukudu and Felienne Hermans last summer at Dagstuhl where they gave me advice on how to promote transfer — I’m grateful for their expertise.

I always teach with peer instruction now (because of the many arguments for it), so steps 1 and 2 have lots of questions and activities for students throughout. These are in the talk slides.

Digital Image Filters

The first paper is “Scaffolding to Support Liberal Arts Students Learning to Program on Photographs” (submitted version of paper here). We use this unit in this course: COMPFOR 121: Computing for Creative Expression.

Step 1: The teaspoon language is Pixel Equations which I blogged about here. You can run it here.

Students choose an image to manipulate as input, then specify their image filter by (a) writing a logical expression describing the pixels that they want to manipulate and (b) writing equations for how to compute the red, green, and blue channels for those pixels. Values for each channel are 0 to 255, and we talk about single byte values per channel. The equation for specifying the channel change can also reference the previous values of the channels, using the variables red, green, blue, rojo, verde, or azul.
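To make the two parts of a filter concrete, here is a minimal Python sketch of the idea (it is not the Pixel Equations implementation). It assumes an image is just a list of rows of (red, green, blue) tuples; `apply_filter` and `clamp` are hypothetical names chosen for illustration. The condition plays the role of the logical expression, and the three small functions play the role of the channel equations, each of which can read the previous channel values.

```python
def clamp(value):
    """Keep a channel value inside the single-byte range 0..255."""
    return max(0, min(255, int(value)))


def apply_filter(image, condition, red_eq, green_eq, blue_eq):
    """Return a new image; only pixels where condition is true are changed.

    Assumption: image is a list of rows, each row a list of (r, g, b) tuples.
    """
    result = []
    for row in image:
        new_row = []
        for (red, green, blue) in row:
            if condition(red, green, blue):
                # Each equation sees the previous values of all three channels.
                new_row.append((clamp(red_eq(red, green, blue)),
                                clamp(green_eq(red, green, blue)),
                                clamp(blue_eq(red, green, blue))))
            else:
                new_row.append((red, green, blue))
        result.append(new_row)
    return result


# A tiny made-up 2x2 image, just for demonstration.
image = [[(200, 30, 30), (10, 10, 10)],
         [(250, 240, 20), (0, 128, 255)]]

# "Where red > 100: halve the red channel, leave green and blue alone."
darker_reds = apply_filter(image,
                           lambda r, g, b: r > 100,
                           lambda r, g, b: r / 2,
                           lambda r, g, b: g,
                           lambda r, g, b: b)

# "For every pixel: subtract each channel from 255" (a negative).
negative = apply_filter(image,
                        lambda r, g, b: True,
                        lambda r, g, b: 255 - r,
                        lambda r, g, b: 255 - g,
                        lambda r, g, b: 255 - b)

print(darker_reds)
print(negative)
```

Clamping is one way to respect the 0 to 255 range; a real tool might instead wrap around or report an error.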

Step 2: The latest version of the pixel microworld for Snap is available here. Click See Code to see all the examples. I leave lots of worked examples in the projects, as a starting point for homework and other projects.

Here’s what negation looks like:

Here’s an example of replacing a green background with the Alice character so that Alice is standing in front of a waterfall.
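For readers who think in text, the same background replacement can be sketched in a few lines of Python. This is only an illustration, not the Snap! blocks from the microworld: `is_green_screen`, `chromakey`, and the `margin` threshold are assumptions made up for this example, and the two tiny images are invented data.

```python
def is_green_screen(pixel, margin=50):
    """Treat a pixel as background when green clearly dominates red and blue.

    The margin of 50 is an arbitrary choice for this sketch.
    """
    red, green, blue = pixel
    return green > red + margin and green > blue + margin


def chromakey(foreground, background, margin=50):
    """Replace green-screen pixels in foreground with the matching background pixel.

    Assumption: both images are same-sized lists of rows of (r, g, b) tuples.
    """
    if len(foreground) != len(background):
        raise ValueError("images must have the same number of rows")
    result = []
    for fg_row, bg_row in zip(foreground, background):
        if len(fg_row) != len(bg_row):
            raise ValueError("rows must have the same width")
        result.append([bg if is_green_screen(fg, margin) else fg
                       for fg, bg in zip(fg_row, bg_row)])
    return result


# Invented 2x2 stand-ins for the character photo and the waterfall.
alice = [[(20, 230, 30), (210, 180, 150)],
         [(25, 240, 40), (60, 60, 200)]]
waterfall = [[(90, 140, 200), (95, 150, 210)],
             [(80, 130, 190), (85, 135, 195)]]

combined = chromakey(alice, waterfall)
print(combined)
```

The test for "green enough" is where students tend to experiment: too small a margin eats into the character, too large a margin leaves a green halo.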

The homework assignment here involves creating their own image filters, then generating a collage of their own images (photos or drawn) in their original form and filtered.

Step 3: The Runestone ebook chapter on pixels is here.

Questions after the Python code include “Why do we have the for loop above?” And “What would happen if we changed all the 255 values to 120? (Yes, it’s totally fair to actually try it.)”

Recognizing and Generating Human Language

The second paper is “Scaffolding to Support Humanities Students Programming in a Human Language Context” (submitted version here). I originally developed this unit for this course COMPFOR 111: Computing’s Impact on Justice: From Text to the Web because we use chatbots early on in the course. But then, I added chatbots as an expressive medium to the Expression course, and we use parts of this unit in that course, too.

Step 1: I created a little teaspoon language for sentence recognition and generation — first time that I’ve created a teaspoon language with me as the teacher, because I needed one for my course context. The language is available here (you switch between recognition and generation from a link in the upper left corner).

The program here is a sentence model. It can use five words: noun, verb, adverb, adjective, and article. Above the sentence model is the dictionary or lexicon. Sentence generation creates 10 random sentences from the model. Sentence recognition also takes an input sentence, then tries to match the elements in the model to the input sentence. I explain the recognition behavior like this:

This is very simple, but it’s enough to create opportunities to debug and question how things work.

- I give students sentences and models to try. Why is “The lazy dog runs to the student quickly” recognized as “noun verb noun” but “The lazy dog runs to the house quickly” not recognized? Because “house” isn’t in the original lexicon. As students add words to the lexicon, we can talk about program behavior being driven by both algorithm and data (which sets us up for talking about the importance of training data when creating ML systems later).

- I give them sentences in different English dialects and ask them to explore how to make models and lexicons that can match all the different forms.

- For generation, I ask them: Which leads to better generated sentences? Smaller models (“noun verb”) or larger models (“article adjective noun verb adverb”)? Does adding more words to the lexicon help? Or tuning the words that are in the lexicon?
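Here is a rough Python sketch of the model-plus-lexicon idea, for readers who want to see the moving parts. It is not the teaspoon language's implementation. In particular, the recognizer below assumes that words not matching the next model element are simply skipped, which is one behavior consistent with the “noun verb noun” example above; the lexicon contents and the names `generate` and `recognize` are invented for illustration.

```python
import random

# A hypothetical lexicon: each part of speech maps to the words it allows.
LEXICON = {
    "article": ["the", "a"],
    "adjective": ["lazy", "curious"],
    "noun": ["dog", "student"],
    "verb": ["runs", "reads"],
    "adverb": ["quickly", "slowly"],
}


def generate(model, lexicon, count=10, rng=random):
    """Build count random sentences by picking one word per model element."""
    parts = model.split()
    return [" ".join(rng.choice(lexicon[part]) for part in parts)
            for _ in range(count)]


def recognize(model, lexicon, sentence):
    """Return True if the model's elements can be matched, in order.

    Assumption: input words that do not match the next element are skipped.
    """
    words = [word.strip(".,!?").lower() for word in sentence.split()]
    position = 0
    for part in model.split():
        # Scan forward until a word fits the current part of speech.
        while position < len(words) and words[position] not in lexicon[part]:
            position += 1
        if position == len(words):
            return False  # ran out of words before matching this element
        position += 1
    return True


for sentence in generate("article adjective noun verb adverb", LEXICON):
    print(sentence)

print(recognize("noun verb noun", LEXICON,
                "The lazy dog runs to the student quickly"))
print(recognize("noun verb noun", LEXICON,
                "The lazy dog runs to the house quickly"))
```

Adding "house" to the noun list makes the second sentence recognizable without touching either function, which is the algorithm-versus-data point in miniature.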

Step 2: Tamara Nelson-Fromm built the first set of blocks for language recognition and generation, and I’ve added to them since. These include blocks for language recognition.

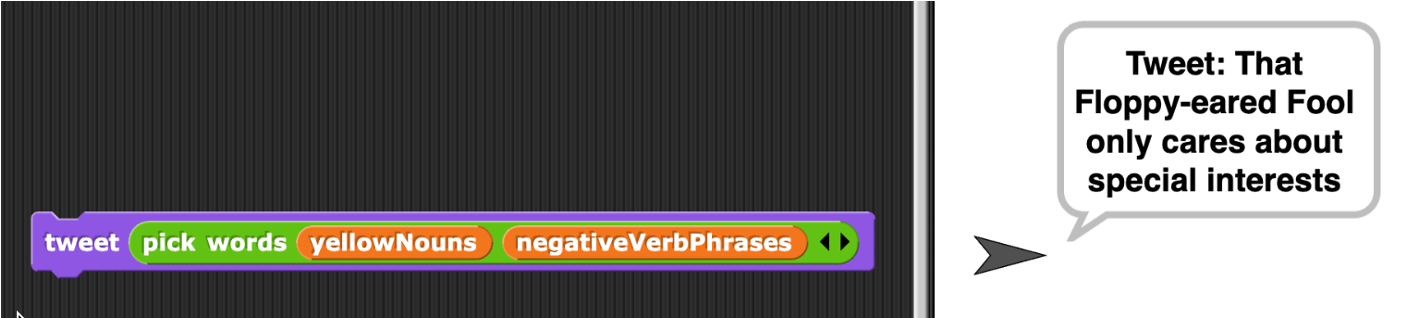

And language generation.

The examples in this section are fun. We create politically biased bots who tweet something negative about one party, listen for responses about their own party, then say something positive in retort about their party.

We create scripts that generate Dr. Seuss like rhymes.

The homework in this section is to generate haiku.

Step 3: The Runestone ebook chapter for this unit is here. The chapter starts out with the sentence generator, and then Snap! blocks that do the same thing, and then two different Python programs that do the same thing. We ask questions like “Which of the following is a sentence that could NOT be produced from the code above?” And “Let’s say that you want to make it possible to generate ‘A curious boat floats.’ Which of the lines below do you NOT have to change?”

Where might this pattern be useful?

We don’t use this whole three-step pattern for every unit in these classes. We do something similar for chatbots, but that’s really it. Teaspoon languages in these classes are about getting started, to get past the “I like computers. I hate coding” stage (as described by Paulina Haduong in a paper I cite often). We use the latter two steps in the pattern more often — each class has an ebook with four or five chapters. The Snap to Python steps are about increasing the authenticity for the block-based programming and developing confidence that students can transfer their knowledge.

I developed this pattern to give non-STEM (arts and humanities) students a gradual, scaffolded approach to programming, but it could be useful in other contexts:

- We originally developed teaspoon languages for integrating computing into other subjects. The first two steps in this process might be useful in non-CS classes to create a path into Snap programming.

- The latter two steps might be useful to promote transfer from block-based into textual programming.

Programming in blocks lets far more people code — but not like software engineers: Response to the Ofsted Report

A May 2022 report from the UK government, Research Review Series: Computing, makes some strong claims about block-based programming that I think are misleading. The report summarizes studies from the computing education research literature. Here’s the paragraph that I’m critiquing:

Block-based programming languages can be useful in teaching programming, as they reduce the need to memorise syntax and are easier to use. However, these languages can encourage pupils to develop certain programming habits that are not always helpful. For example, small-scale research from 2011 highlighted 2 habits that ‘are at odds with the accepted practice of computer science’ (footnote). The first is that these languages encourage a bottom-up approach to programming, which focuses on the blocks of the language and not wider algorithm design. The second is that they may lead to a fine-grained approach to programming that does not use accepted programming constructs; for example, pupils avoiding ‘the use of the most important structures: conditional execution and bounded loops’. This is problematic for pupils in the early stages of learning to program, as they may carry these habits across to other programming languages.

I completely agree with the first sentence — there are benefits to using block-based programming in terms of reducing the need to memorize syntax and increasing usability. There is also evidence that secondary school students learn computing better in block-based programming than in text-based programming (see blog post). Blanchard, Gardner-McCune, and Anthony found (a Best Paper awardee from SIGCSE 2020) that university students learned better when they used both blocks and text than when they used blocks alone.

The two critiques of block-based programming in the paragraph are:

- “These languages encourage a bottom-up approach to programming, which focuses on the blocks of the language and not wider algorithm design.”

- “They may lead to a fine-grained approach to programming that does not use accepted programming constructs…conditional execution and bounded loops.”

Key Point #1: Block-based programming doesn’t cause either of those critiques. What about programming with blocks rather than text could cause either of these to be true?

I’m programming a lot in Snap! these days for two new introductory computing courses I’m developing at the University of Michigan. I’ve been enjoying the experience. I don’t think that either of these critiques is true about my code or that of the students helping me develop the courses. I regularly do top-down programming where I define high-level custom blocks, as I design my program overall. Not only do I use conditional execution and bounded loops regularly, but Snap allows me to create new kinds of control structures, which has been a terrific help as I create block-based versions of our Teaspoon languages. My experience is only evidence that those two statements need not be true, just because the language is block-based.

I completely believe that the studies being cited in this research report saw and accurately describe exactly these points — that students worked bottom-up and that they rarely used conditioned execution and bounded loops. I’m not questioning the studies. I’m questioning the inference. I don’t believe at all that those are caused by block-based languages.

Key Point #2: Block-Based Programming is Scaffolding, but not Instant Expertise. For those not familiar, here are two education research terms that will be useful in making my argument.

- Scaffolding is the support provided to a learner to enable them to achieve some task or process which they might not be able to achieve without that support. A kid can’t hop a fence by themselves, but they can with a boost; that’s a kind of scaffolding. Block-based programming languages are a kind of scaffolding (and here’s a nice paper from Weintrop and Wilensky describing how it is scaffolding; thanks to Ben Shapiro for pointing it out).

- The Zone of Proximal Development (ZPD) describes the difference between what a student can do on their own (one edge of the ZPD) and what they might be able to do with the support of a teacher or scaffolding (the far edge of the ZPD). Maybe you can’t code a linked list traversal on your own, but if I give you the pseudocode or give you a lecture on how to do it, then you can. But the far edge of ZPD is unlikely to be that you’re a data structure expert.

Let’s call the task that students were facing in the studies reviewed in the report: “Building a program using good design and with conditioned execution.” If we asked students to achieve this task in a text-based language, we would be asking them to perform the task without scaffolding. Here’s what I would expect:

- Fewer students would complete the task. Not everyone can achieve the goal without scaffolding.

- Those students who do complete the task likely already have a strong background in math or computing. They are probably more likely to use good design and to use conditioned execution. The average performance in the text-based condition would be higher than in the block-based condition, simply because you’ve filtered out everyone who doesn’t have the prior background.

Fewer people succeed. More people drop out. Pretty common CS Ed result. If you just compare performance text vs. blocks, text looks better. For a full picture, you also have to look at who got left out.

So let’s go back to the actual studies. Why didn’t we see good design in students’ block-based programs? Because the far edge of the ZPD is not necessarily expert practice. Without scaffolding (block-based programming languages), many students are not able to succeed at all. Giving them the scaffolding doesn’t make them experts. The scaffolding can take them as far as the ZPD allows. It may take more learning experiences before we can get to good design and conditioned execution — if that even makes sense.

Key Point #3: Good software engineering practice is the wrong goal. Is “building a program using good design and with conditioned execution” really the task that students were engaging in? Is that what we want students to succeed at? Not everyone who learns to program is going to be a software engineer. (See the work I cite often on “alternative endpoints.”) Using good software engineering practices as the measure of success doesn’t make sense, as Ben Shapiro wrote about these kinds of studies several years ago on Twitter (see his commentary here, shared with his permission). A much more diverse audience of students is using block-based programming than ever used text-based programming. They are going to solve different problems for different purposes in different ways (a point I made in this blog post several years ago). Few US teachers in K-12 are taught how to teach good software engineering practice; that’s simply not their goal (a point that Aman Yadav made to me when discussing this post). We know from many empirical studies that most Scratch programs are telling a story. Why would you need algorithmic design and conditioned execution for that task? They’re not doing complicated coding, but the little bit of coding that they’re using is powerful and is engaging for students, and relatively few students are getting that. I’m far more concerned about the inequitable access to computing education than I am about whether students are becoming good software engineers.

Summary: It’s inaccurate to suggest that block-based programming causes bad programming habits. Block-based programming makes programming far more accessible than it ever has been before. Of course, we’re not going to see expert practice as used in text-based languages for traditional tasks. These are diverse novices using a different kind of notation for novel tasks. Let’s encourage the learning and engagement appropriate for each student.

Summarizing findings about block-based programming in computing education

As readers of my blog know, I’m interested in alternative modalities and representations for programming. I’m an avid follower of David Weintrop’s work, especially the work comparing blocks and text for programming (e.g., as discussed in this blog post).

David wrote a piece for CACM summarizing some of his studies on block-based programming in computing education. It has just been published in the August issue. Here’s the link to the piece — I recommend it.

To understand how learners make sense of the block-based modality and understand the scaffolds that novice programmers find useful, I conducted a series of studies in high-school computer science classrooms. As part of this work, I observed novices writing programs in block-based tools and interviewed them about the experience. Through these interviews and a series of surveys, a picture emerged of what the learners themselves identified as being useful about the block-based approach to programming. Students cited features discussed here such as the shape and visual layout of blocks, the ability to browse available commands, and the ease of the drag-and-drop composition interaction. They also cited the language of the blocks themselves, with one student saying “Java is not in English it’s in Java language, and the blocks are in English, it’s easier to understand.” I also surveyed students after working in both block-based and text-based programming environment and they overwhelmingly reported block-based tools as being easier. These findings show that students themselves see block-based tools as useful and shed light as to why this is the case.

An Ebook Integrating Minimal Manuals with Constructionism, Worked Examples, and Inquiry: MOHQ

Our computing education research group at Georgia Tech has been developing and evaluating ebooks for several years (see this post with discussion of some of them). We publish on them frequently, with a new paper just accepted to ICER 2016 in Melbourne. We use the Runestone Interactive platform which allows us to create ebooks with a lot of different kinds of learning activities — not just editing and running code (which I’ve been arguing for awhile is really important to support a range of abilities and motivations), but including editing and running code.

It’s a heavyweight platform. I have been thinking about alternative models of ebooks — maybe closer to e-pamphlets. Since I was working with GP (see previous post) and undergraduate David Tran was interested in working with me on a GP project, we built a prototype of a minimalist medium for learning CS. I call it a MOHQ: Minimal manual Organized around Hypertext Questions: http://home.cc.gatech.edu/gpblocks. (Suggestion: Use Firefox if you can for playing with browser GP. WAY faster for the JavaScript execution than either Chrome or Safari on my Mac.)

Minimal Manuals

John Carroll came up with the idea of minimal manuals back in the 1980’s (see the earliest paper I found on the idea). The goal is to help people to use complicated computing devices with the minimum of overhead. Each page of the manual starts with a task — something that a user would want to do. The goal is to put the instruction for how to achieve that task all on that one page.

The idea of minimalist instruction is described here: http://www.instructionaldesign.org/theories/minimalism.html.

The four principles of minimal instruction design are:

- Allow learners to start immediately on meaningful tasks.

- Minimize the amount of reading and other passive forms of training by allowing users to fill in the gaps themselves.

- Include error recognition and recovery activities in the instruction.

- Make all learning activities self-contained and independent of sequence.

There’s good evidence that minimal manuals really do work (see http://doc.utwente.nl/26430/1/Lazonder93minimal.pdf). Learners become more productive more quickly with minimal manuals, with surprisingly high scores on transfer and retention. A nice attribute of minimal manuals is that they’re geared toward success. They likely increase self-efficacy, a significant problem in CS education.

The goal of most minimal instruction is to be able to do something. What about learning conceptual knowledge?

Adding Learning Theory: Inquiry, Worked Examples, and Constructionism

I started exploring minimal manuals as a model for designing CS educational media after a challenge from Alan Kay. Alan asked me to think about how we would teach people to be autodidacts. One of the approaches used to encourage autodidactism is inquiry-based learning. Could we structure a minimal manual around questions that they might have or that we want students to ask themselves?

We structure our Runestone ebooks around an Examples+Practice framework. We provide a worked example (typically executable code, but sometimes a program visualization), and then ask (practice) questions about that example. We provide one or two practice exercises for every example. Based on Lauren Margulieux’s work, the point of the practice is to get students to think about the example, to engage with it, and to explain it to themselves. It’s less important that they do the questions; I want the students to read the questions and think about them, and Lauren’s work suggests that even the feedback may not be all that important.

Finally, one of the aspects that I like about Runestone is that every example in an active code area is a complete Python interpreter. Modify the code any way you want. Erase all of it and build something new if you want. It’s constructionist. We want students to construct with the examples and go beyond them.

MOHQ: Minimal Manual Organized around Hypertext Questions

The prototype MOHQ that David Tran and I built (http://home.cc.gatech.edu/gpblocks) is an implementation of this integration of minimal manuals with constructionism, inquiry, and worked examples. Each page in the MOHQ:

- Starts with a question that a student might be wondering about.

- Offers a worked example in a video.

- Offers the opportunity to construct with the example project.

- Asks one or two practice questions, to prompt thinking about the project.

Using the minimal design principles to structure the explanation:

#1: Allow learners to start immediately on meaningful tasks.

The top page offers several questions that I hope are interesting to a student. Every page offers a project that aims to answer that question. GP is a good choice here because it’s blocks-based (low cognitive load) and I can do MediaComp in it (which is what I wanted to teach in this prototype).

#2: Minimize the amount of reading and other passive forms of training by allowing users to fill in the gaps themselves.

Each page has a video of David or me solving the problem in GP. Immediately afterward is a link to jump directly into the GP project exactly where the video ended. Undo something, redo something, start over and build something else. The point is to watch a video (where we try to explain what we’re doing, but we’re certainly not filling in all the gaps), then figure out how it works on your own.

Then we offer a couple of practice questions to challenge the learner: Did you really understand what was going on here?

#3: Include error recognition and recovery activities in the instruction.

Error recovery is easy when everything is in the browser: just hit the back button. You can’t save. You can’t damage anything. (We tell people this explicitly on every page.)

#4: Make all learning activities self-contained and independent of sequence.

This is the tough one. I want people to actually learn something in a MOHQ, that pixels have red, green, and blue components, and chromakey is about replacing one color with a background image, and that removing every other sample increases the frequency of a sound — and more general ideas, e.g., that elements in a collection can be referenced by index number.

So, all the driving questions from the home page start with, “Okay, you can just dive in here, but you might want to first go check out these other pages.” You don’t have to, but if you want to understand better what’s going on here, you might want to start with simpler questions.

We also want students to go on — to ask themselves new questions, to go try other projects. After each project, we offer some new questions that we hope that students might ask themselves. The links are explicitly prompts. “You might be thinking about these questions. Even if you weren’t, you might want to. Let’s see where we can explore next.”
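As one concrete instance of the ideas mentioned above (indexing into a collection, and removing every other sample to raise the frequency of a sound), here is a small Python sketch. It is not taken from the GP projects. It assumes a sound is just a list of sample values played back at a fixed rate, and `sine_wave` and `estimate_frequency` are helper names made up for this illustration. The frequency estimate counts sign changes, which is a crude but adequate measure for a pure tone.

```python
import math


def sine_wave(frequency, sample_rate, seconds, phase=0.1):
    """Generate a pure tone as a list of samples (small phase avoids exact zeros)."""
    count = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * frequency * n / sample_rate + phase)
            for n in range(count)]


def estimate_frequency(samples, sample_rate):
    """Estimate the pitch of a pure tone by counting sign changes.

    A sine wave crosses zero twice per cycle, so cycles = crossings / 2.
    """
    crossings = sum(1 for a, b in zip(samples, samples[1:])
                    if (a < 0) != (b < 0))
    return crossings * sample_rate / (2 * len(samples))


SAMPLE_RATE = 8000  # samples per second, an arbitrary choice for this sketch

original = sine_wave(440, SAMPLE_RATE, 1.0)

# Keep the samples at index 0, 2, 4, ...: half as many samples, same playback rate.
faster = original[::2]

print(len(original), estimate_frequency(original, SAMPLE_RATE))
print(len(faster), estimate_frequency(faster, SAMPLE_RATE))
```

The second sound has half as many samples, so at the same playback rate it lasts half as long and the same number of cycles go by in half the time.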

Current Prototype and What Comes Next

Here’s the map of pages that we have out there right now. We built it in a Wiki which facilitated creating the network of pages that we want. This isn’t a linear book.

There’s maybe a dozen pages out there, but even with that relatively small size, it took most of a semester to pull these together. Producing the videos and building these pages by hand (even in a Wiki) was a lot of work. The tough part was every time we changed our minds about something — and had to go back through all of the previously built pages and update them. Since this is a prototype (i.e., we didn’t know what we wanted when we started), that happened quite often. If we were going to add more to the GP MOHQ, I’d want to use a tool for generating pages from a database as we did with STABLE, the Smalltalk Apprenticeship-Based Learning Environment.

I would appreciate your thoughts about MOHQ. Call this an expert review of the idea.

- Thumbs-up or down? Worth developing further, or a bad direction?

- What do you think is promising about this idea?

- What would we need to change to make it more effective for student learning?

Blocks and Beyond Workshop at VL/HCC: Lessons and Directions for First Programming Environments

Thursday, October 22, 2015, Atlanta, GA

A satellite workshop of the 2015 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC) https://sites.google.com/site/vlhcc2015

Scope and Goals

Blocks programming environments represent program syntax trees as compositions of visual blocks. This family of tools includes Scratch, Code.org’s Blockly lessons, App Inventor, Snap!, Pencil Code, Looking Glass, etc. They have introduced programming and computational thinking to tens of millions, reaching people of all ages and backgrounds.

Despite their popularity, there has been remarkably little research on the usability, effectiveness, and generalizability of affordances of these environments. The goal of this workshop is to begin to distill testable hypotheses from the existing folk knowledge of blocks environments and identify research questions and partnerships that can legitimize, or discount, pieces of this knowledge. It will bring together educators and researchers who work with blocks languages and members of the broader VL/HCC community interested in this area. We seek participants with diverse expertise, including, but not limited to: design of programming environments, instruction with these environments, the learning sciences, data analytics, usability, and more.

The workshop will be a generative discussion that sets the stage for future work and collaboration. It will include participant presentations and demonstrations that frame the discussion, followed by reflection on the state of the field and smaller working-group discussion and brainstorming sessions.

Suggested Topics for Discussion

- Who uses blocks programming environments and why?

- Which features of blocks environments help or hinder users? How do we know? Which of these features are worth incorporating into more traditional IDEs? What helpful features are missing?

- How can blocks environments and associated curricular materials be made more accessible to everyone, especially those with disabilities?

- Can blocks programming appeal to a wider range of interests (e.g., by allowing connections to different types of devices, web services, data sources, etc.)?

- What are the best ways to introduce programming to novices and to support their progression towards mastery? Do these approaches differ for learners of computing basics and for makers?

- What are the conceptual and practical hurdles encountered by novice users of blocks languages when they face the transition to text languages and traditional programming communities? What can be done to reduce these hurdles?

- How can we best harness online communities to support growth through teaching, motivating, and providing inspiration and feedback?

- What roles should collaboration play in blocks programming? How can environments support that collaboration?

- In these environments, what data can be collected, and how can that data be analyzed to determine answers to questions like those above? How can we use data to answer larger scale questions about early experiences with programming?

- What are the lessons learned (both positive and negative) from creating first programming environments that can be shared with future environment designers?

Submission

We invite two kinds of submissions:

- A 1 to 3 page position statement describing an idea or research question related to the design, teaching, or study of blocks programming environments.

- A paper (up to 6 pages) describing previously unpublished results involving the design, study, or pedagogy of blocks programming environments.

All submissions must be made as PDF files to the EasyChair Blocks and Beyond workshop submission site (https://easychair.org/conferences/?conf=blocksbeyond2015). Because this workshop will be discussion-based, rather than a mini-conference, the number of presentation/demonstration slots is limited. Authors for whom presentation or demonstration is essential should indicate this in their submission.

Important Dates

- 24 Jul. 2015: Submissions due.

- 14 Aug. 2015: Author notification.

- 4 Sep. 2015: Camera ready copies due.

- 22 Oct. 2015: Workshop in Atlanta.

Organizers

- Franklyn Turbak (chair), Wellesley College

- David Bau, Google

- Jeff Gray, University of Alabama

- Caitlin Kelleher, Washington University, St. Louis

- Josh Sheldon, MIT

Program Committee

- Neil Brown, University of Kent

- Dave Culyba, Carnegie Mellon University

- Sayamindu Dasgupta, MIT

- Deborah Fields, Utah State University

- Neil Fraser, Google

- Mark Friedman, Google

- Dan Garcia, University of California, Berkeley

- Benjamin Mako Hill, University of Washington

- Fred Martin, University of Massachusetts Lowell

- Paul Medlock-Walton, MIT

- Yoshiaki Matsuzawa, Aoyama Gakuin University

- Amon Millner, Olin College

- Ralph Morelli, Trinity College

- Brook Osborne, Code.org

- Jonathan Protzenko, Microsoft Research

- Ben Shapiro, Tufts University

- Wolfgang Slany, Graz University of Technology

- Daniel Wendel, MIT
