Posts tagged ‘NCWIT’

Ruthe Farmer’s important big idea: The Last Mile Education Fund to increase diversity in STEM

I met Ruthe Farmer (Wikipedia page) when she represented the Girl Scouts in the early days of the NSF Broadening Participation in Computing (BPC) alliances. She played a significant role in NCWIT. I had many opportunities to interact with her in her roles at NCWIT and CSforAll. Ruthe organized the White House summit with ECEP in 2016 (see blog post) when she was with the Office of Science and Technology Policy in the Obama administration. Her latest project may be the one that’s closest to my heart.

Ruthe has founded and is CEO of the Last Mile Education Fund. Their mission is:

The Last Mile Education Fund offers a disruptive approach to increasing diversity in tech and engineering fields by addressing critical gaps in financial support for low-income underrepresented students within four semesters of graduation.

I was still at Georgia Tech when I heard about the completion microgrant program at Georgia State. Georgia State was (and still is) making headlines for their use of big data to boost retention and get students graduated. Georgia State is the kind of institution where over half of their students are classified as low-income. There is a huge social benefit when GSU can improve their retention statistics. The completion grant program was started in 2011 and focuses on students who could graduate (e.g., their grades were fine), but they had run out of money before they finished. The grant program gave no more than $2,500 per student (Inside Higher Education article). Today, we know that the average grant has actually been $900. That’s a shockingly low cost for getting students the rest of the way to their college degree. It’s a great idea, and deserves to be applied more broadly than one university.

The Last Mile Education Fund especially focuses on getting students from diverse backgrounds into STEM careers. These last gaps in funding are among the barriers that keep girls out of STEM careers (where Ruthe’s focus was in the Girl Scouts and NCWIT), but they also keep out low-income students and people of color.

I was reminded to write about the Last Mile Education Fund by Alfred Thompson’s blog (see post here). He’s got a lot more information about the Last Mile Education Fund there.

I am a first generation graduate. My parents and I had no idea how to even apply to college. I am forever grateful that Wayne State University found me in my high school, guided me to applying, and gave me a scholarship to attend. I’m a privileged white guy. Not everybody gets the opportunities I had. It’s critical to extend the opportunity of a higher education degree to a broader and more diverse audience.

The Last Mile Education Fund is important for closing the gap for students from diverse backgrounds. I’m a monthly supporter, and I encourage you to consider giving, too.

Changing Computer Science Education to eliminate structural inequities and in response to a pandemic: Starting a Four Part Series

George Floyd’s tragic death has sparked a movement to learn about race and to eliminate structural inequities and racism. My email is flooded with letters and statements demanding change and recommending actions. These include a letter from Black scholars and other members of the ACM to the leadership of the ACM (see link here), the Black in Computing Open Letter and Call to Action (see link here, and the Hispanics in Computing supportive response link here). The letter about addressing institutional racism in the SIGCHI community from the Realizing that All Can be Equal (R.A.C.E) is powerful and enlightening (see link here).

I’m reading daily about race. I’m not an expert, or even particularly well-informed yet. One of the books I’m reading is Me and White Supremacy by Layla F. Saad (see Amazon link here) where the author warns against:

Using perfectionism to avoid doing the work and fearing using your voice or showing up for antiracism work until you know everything perfectly and can avoid being called out for making mistakes.

This post is the start of a four part series about what we should be changing in computing education towards eliminating structural inequities. We too often build computing education for the most privileged, for the majority demographic groups. It’s past time to support alternative pathways into computing. Even if you’re not driven by concerns about racial injustice, I ask you to take my proposals seriously because of the pandemic. We don’t know how to teach CS remotely at this enormous scale over the next year, and the least-privileged students will be hurt the most by this. We must teach CS differently so that we eliminate the gap between the most and least privileged of our students. Here’s what we need to do.

Learn about Race

Amber Solomon, a PhD student working with Betsy DiSalvo and me, reviewed my first two posts about race in CS Education (at Blog@CACM and here a few weeks ago). Amber has written on intersectionality in CS education, and is writing a dissertation about the role of embodied representations in CS education (see a post here about her most recent paper). She recommended more on learning about race:

- Whiteness as Property by Cheryl Harris (see link here). Harris, Andre Brock, and some race scholars argue that to understand racism, you should understand whiteness, not Blackness.

- The Matter of Race in Histories of American Technology by Herzig (see link here). She has the clearest explanation of “race and/as technology” that I’ve read. And she also does a great job explaining why we can’t just say that race is a social construct.

Two videos:

- Repurposing Our Pedagogies: Abolitionist Teaching in a Global Pandemic (see YouTube link here).

- Data, AI, Public Health, Policing, the Pandemic, and Un-Making Carceral States (see YouTube link here). It’s about data, but they get into what it means to be racially Black, white, etc., and Ruha says something super interesting: “rather than collecting racial data, think about what it would mean to collect data on racism.”

Think about the words you use, like “Underrepresented Minority”

Tiffani Williams wrote a Blog@CACM post in June that made an important point about the term “underrepresented minority” (see link here). She argues that it’s a racist term and we should strike it from our language.

Reason #1: URM is racist language because it denies groups the right to name themselves.

Reason #2: URM is racist language because it blinds us to the differences in circumstances of members in the group.

Reason #3: URM is racist language because it implies a master-slave relationship between overrepresented majorities and underrepresented minorities.

In Me and White Supremacy, Saad uses BIPOC (Black, Indigenous, and People of Color), but points out that that is mostly a shorthand for “people lacking white privilege.” She argues, as does Williams, that the term BIPOC ignores the differences in experience between people in those groups. I am striving to be careful in my language and be thoughtful when I use terms like “BIPOC” and “underrepresented.”

Change Computing Departments

Amy Ko has made some strong and insightful posts in the last month about the injustice and exclusion in CS education (see Microsoft presentation slides here). She wrote a powerful post about why her undergraduate major in Information Technology at the University of Washington is racist (see link here). Obviously, her point is that it’s not just her program — certainly the vast majority of computing majors are racist in the ways that she describes. There are mechanisms that are better, like the lottery I described recently to reduce the bias in admission to the major. Amy’s points are inspiring this blog post series.

Manuel Pérez-Quiñones has started blogging, with posts on what CS departments should do to dismantle racism (see link here) and about why CS departments should create more student organizations to combat racism (see link here). His first post has a quote that inspires me:

First, it should come as no surprise that many things we assume to be fair, standard, or just plain normal in reality are not. Even our notion of “fair” has been constructed from a point of view that prioritizes fairness for certain groups. Not only is history written by the victors; laws, structures, and other pieces of society are developed by them too. To expect them to be fair or equitable is naive at best.

Chad Jenkins shared with me a video of his keynote from the RSS 2018 Conference where he suggests that CS departments need to change their research focus, too, to incorporate a value for equity and human values.

The Chair of our department’s Diversity, Equity, and Inclusion (DEI) Committee, Wes Weimer, is pushing for all Computer Science departments to be transparent about how they’re doing on their goals to make CS more diverse and equitable, and what their plans are. The Computer Science & Engineering division at the University of Michigan is serving as an example by making its annual DEI report publicly available here. In the comments, please share your department’s DEI report. Let’s follow Wes’s lead and make this the common, annual practice.

Change how we teach Computing

Manuel’s point isn’t just about departments. We as individual teachers of computer science and computing make choices which we think are “fair, standard” but actually support and enforce structural inequities. We have to change how we teach. CS for All has published a statement on anti-racism and injustice (see link here) where they say:

We pledge to repeatedly speak out against our historical pedagogies and approaches to computer science instruction that are grounded and designed to weed out all but a small prerogative subset of the US population.

Chad Jenkins mailed me the statement from the University of Maryland’s CS department about their recommendations to improve diversity and inclusion. I loved this quote, which will be the theme for this series of posts:

Creating a task force within the Education Committee for a full review of the computer science curriculum to ensure that classes are structured such that students starting out with less computing background can succeed, as well as reorienting the department teaching culture towards a growth mindset

We currently teach computer science in ways that “weed out all but a small prerogative subset of the US population” (CS for All). We need to teach so that “students starting out with less computing background can succeed” (UMdCS). We teach in ways that assume a fixed mindset — we presume that some students have a “Geek Gene” and there’s nothing much that teaching can do to change that. We know the opposite — teaching can trump genetics.

Even if you don’t care about race or believe that we have created structural inequities in CS education, I ask you to change because of the pandemic. Teaching on-line will likely hurt our students with the least preparation (see post here). We have to teach differently this year when students will have fewer resources, and we are literally inventing our classes anew in remote forms. If we don’t teach differently, we will increase the gap between those with more and those with less computing background.

While I am just learning about race, I have been studying for years how to teach computing to people with less computing background. This is what this series of posts is about. In the next three posts, I make concrete recommendations about how we should teach differently to reduce inequity. I hope that you are inspired by the desire to eliminate racial inequities, but if not, I trust that you will recognize the need to teach differently because of the pandemic.

First step: Stop using pseudocode on the AP CS Principles Exam

Here’s an example of a structural inequity that weeds out students with less computing background. The AP CS Principles exam (see website here) is meant to be agnostic about what programming language the students are taught, so the programming problems on the actual exam are given in a pseudocode — either text or block-based (randomly). There is no interpreter generally available for the pseudocode, so students learn one language (maybe Snap! or Scratch or MIT App Inventor) and answer questions in another one.

Advanced Placement (AP) classes are generally supposed to replicate the experience of introductory courses at College. AP CSP is supposed to map to a non-CS majors’ intro to computing course. How many of these teach in language X, but then ask students to take their final exam in language Y which they’ve never used?

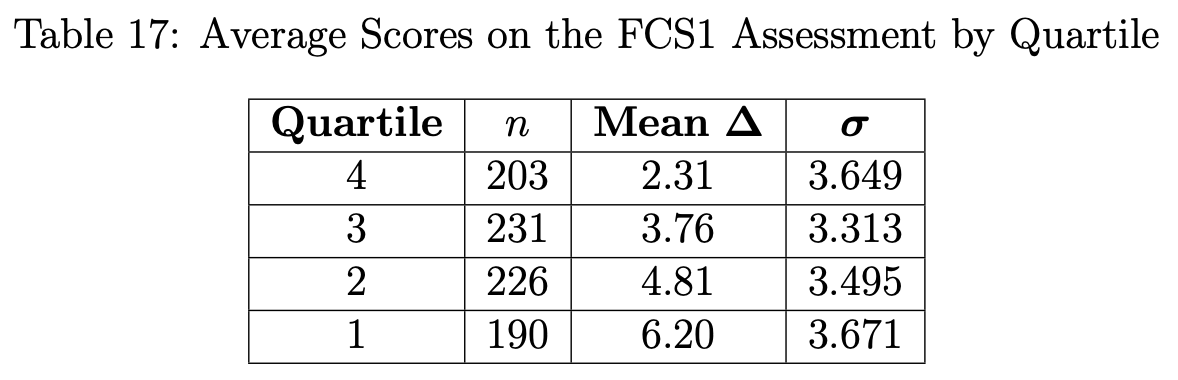

Allison Elliott Tew’s dissertation (see link here) is one of the only studies I know where students completed a validated instrument both in a pseudocode and in whatever language they learned (Java, MATLAB, and Python in her study). She found that the students who scored the best on the pseudocode exam had the closest match in scores between the pseudocode exam and the intro language exam, with an average difference of 2.31 answers out of the 27 questions on the exams (see table below). But the average difference increases dramatically for lower-scoring students. For the bottom two quartiles, the difference is 17% (4.8 questions out of 27) and 22% (6.2 questions out of 27). It’s not too difficult for students in the best-performing quartile to transfer their knowledge to the pseudocode, but it’s a significant challenge for the lowest-performing two quartiles. These results predict that giving the AP CSP exam in a pseudocode is a barrier, which is easily handled by the most prepared students and is much more of a barrier for the least prepared students.
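To make the size of those gaps easier to compare, here is a minimal sketch that converts the question-count differences quoted above into a share of the 27-question exam. The dictionary labels and the function name `gap_as_percent` are illustrative choices of mine, not anything from the dissertation, and the sketch only re-expresses the numbers already given in this post.

```python
# Question-count gaps between the pseudocode exam and the
# intro-language exam, as quoted in this post (out of 27 questions).
TOTAL_QUESTIONS = 27

reported_gaps = {
    "top quartile": 2.31,
    "bottom two quartiles (first figure)": 4.8,
    "bottom two quartiles (second figure)": 6.2,
}


def gap_as_percent(questions, total=TOTAL_QUESTIONS):
    """Express a gap measured in questions as a percentage of the exam."""
    return 100.0 * questions / total


for label, questions in reported_gaps.items():
    share = gap_as_percent(questions)
    print(f"{label}: {questions} of {TOTAL_QUESTIONS} questions = {share:.1f}%")
```

Computed this way, the top-quartile gap is about 8.6% of the exam, while the two lower figures come out near 17.8% and 23.0%. Those are slightly above the 17% and 22% quoted above, which I assume reflects rounding somewhere along the way; the table in the dissertation is the authoritative source.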

I went to a bunch of meetings around the AP CSP exam when it was first being set up. At a meeting where the pseudocode plans were announced, I raised the issue of Allison’s results. The response from the College Board was that, while it was a concern, it was not likely going to be a significant problem for the average student. That’s true, and I accepted it at the time. But now, we’re aware of the structural inequities that we have erected that “weed out all but a small prerogative subset of the US population” (CS for All). It’s not acceptable that switching to a pseudocode dramatically increases the odds that students in the bottom half fail the AP CSP exam, when they might have passed if they were given the language that they learned.

Further, studies of block-based and text languages in the context of AP CSP support the argument that students overall do better in a block-based language (see this post here as an example). Every text-based problem decreases the odds that female, Black, and Hispanic students will pass the exam (using the race labels the students use to self-identify on the exam). If the same problem was in a block-based language, they would likely do better.

Using pseudocode on the AP CSP exam is like a tax. Everyone has to manage a bit more difficulty by mapping to a new language they’ve never used. But it’s a regressive tax. It’s much more easily handled by the most privileged and most prepared students.

AP CSP is an important program that is making computing education available to students who otherwise might never access a CS course. We should grow this program. But we should scrap the AP CSP exam in its current form. I understand that the College Board and the creators of the AP CSP exam were aiming with the pseudocode, mixed-modality exam to create freedom for schools and teachers to teach with whatever language and curriculum they wanted. However, we now know that that flexibility comes at a cost, and that cost is greater for students with less computing background, with less preparation, and who are female, Black, or Hispanic. This is structural inequity.

How to change undergraduate computing to engage and retain more women

My Blog@CACM post for this month talks about the Weston et al. paper (from last week), and about a new report from the Reboot Representation coalition (see their site here). The report covers what the Tech industry is doing to close the gender gap in computing and “what works” (measured both empirically and from interviews with people running programs addressing gender issues).

I liked the emphasis in the report on redesigning the experience of college students (especially female) who are majoring in computing. Some of their emphases:

- Work with community colleges, too. Community colleges tend to be better with more diverse students, and it’s where about half of undergraduates start today. If you want to attract more diverse students, that’s where to start.

- They encourage companies to offer “significant cash awards” to colleges that are successful with diverse students. That’s a great idea — computer science departments are struggling to manage undergraduate enrollment these days, and incentives to keep an eye on diversity will likely have a big impact.

- Grow computer science teachers and professors. I appreciated that second emphasis. There’s a lot of push to grow K-12 CS teachers, and I think it’s working. But there’s not a similar push to grow higher education CS teachers. That’s going to be a chokepoint for growing more CS graduates.

The report is interesting — I recommend it.

Results from Longitudinal Study of Female Persistence in CS: AP CS matters, After-school programs and Internships do not

NCWIT has been tracking their Aspirations in Computing award applicants for several years. The Aspirations award is given to female students to recognize their success in computing. Tim Weston, Wendy DuBow, and Alexis Kaminsky have just published a paper in ACM TOCE (see link here) about their six-year study with some 500 participants, reporting which factors led to persistence into CS in college. The results are fascinating and somewhat surprising, so read all the way to the end of the abstract copied here:

While demand for computer science and information technology skills grows, the proportion of women entering computer science (CS) fields has declined. One critical juncture is the transition from high school to college. In our study, we examined factors predicting college persistence in computer science and technology related majors from data collected from female high school students. We fielded a survey that asked about students’ interest and confidence in computing as well as their intentions to learn programming, game design, or invent new technology. The survey also asked about perceived social support from friends and family for pursuing computing as well as experiences with computing, including the CS Advanced Placement (AP) exam, out-of-school time activities such as clubs, and internships. Multinomial regression was used to predict persistence in computing and tech majors in college. Programming during high school, taking the CS Advanced Placement exam, and participation in the Aspirations awards program were the best predictors of persistence three years after the high school survey in both CS and other technology-related majors. Participation in tech-related work, internships, or after-school programs was negatively associated with persistence, and involvement with computing sub-domains of game design and inventing new applications were not associated with persistence. Our results suggest that efforts to broaden participation in computing should emphasize education in computer programming.

There’s also an article at Forbes on the study which includes recommendations on what works for helping female students to persist in computing, informed by the study (see link here). I blogged on this article for CACM here.

That AP CS is linked to persistence is something we’ve seen before, in earlier studies without the size or length of this study. It’s nice to get that revisited here. I’ve not seen before that high school work experience, internships, and after-school programs did not work. The paper makes a particular emphasis on programming:

While we see some evidence for students’ involvement in computing diverging and stratifying after high school, it seems that involvement in general tech-related fields other than programming in high school does not transfer to entering and persisting in computer science in college for the girls in our sample. Understanding the centrality of programming is important to the field’s push to broaden participation in computing. (Italics in original.)

This is an important study for informing what we do in high school CS. Programming is front-and-center if we want girls to persist in computing. There are holes in the study. I keep thinking of factors that I wish that they’d explored, but they didn’t — nothing about whether the students did programming activities that were personally or socially meaningful, nothing about role models, and nothing about mentoring or tutoring. This paper makes a contribution in that we now know more than we did, but there’s still lots to figure out.

The gender imbalance in AI is greater than in CS overall, and that’s a big problem

My colleague, Rada Mihalcea, sent me a copy of a new (April 2019) report from the AI Now Institute on Discriminating Systems: Gender, Race, and Power in AI (see link here) which describes the diversity crisis in AI:

There is a diversity crisis in the AI sector across gender and race. Recent studies found only 18% of authors at leading AI conferences are women, and more than 80% of AI professors are men. This disparity is extreme in the AI industry: women comprise only 15% of AI research staff at Facebook and 10% at Google. There is no public data on trans workers or other gender minorities. For black workers, the picture is even worse. For example, only 2.5% of Google’s workforce is black, while Facebook and Microsoft are each at 4%. Given decades of concern and investment to redress this imbalance, the current state of the field is alarming.

Without a doubt, those percentages do not match the distribution of gender and ethnicity in the population at large. But we already know that participation in CS does not match the population. How do the AI distributions match the distribution of gender and ethnicity among CS researchers?

A sample to compare to is the latest graduates with CS PhDs. Take a look at the 2018 Taulbee Survey from the CRA (see link here). 19.3% of CS PhDs went to women. That’s terrible gender diversity when compared to the population, and AI (at 10%, 15%, or 18%) is doing worse. Only 1.4% of new CS PhDs were Black. From an ethnicity perspective, Google, Facebook, and Microsoft are doing surprisingly well.
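The comparison above can be laid out as a small calculation. This is only a sketch using the percentages quoted in this post; the variable names and the helper `gap_in_points` are my own, and note that the company figures for Black employees describe the whole workforce, not AI research staff specifically.

```python
# Baselines from the 2018 Taulbee Survey, as quoted in this post.
TAULBEE_WOMEN_PHD = 19.3  # percent of new CS PhDs awarded to women
TAULBEE_BLACK_PHD = 1.4   # percent of new CS PhDs awarded to Black graduates

# Figures from the AI Now Institute report, as quoted in this post.
women_in_ai = {
    "authors at leading AI conferences": 18.0,
    "Facebook AI research staff": 15.0,
    "Google AI research staff": 10.0,
}
black_in_workforce = {
    "Google": 2.5,
    "Facebook": 4.0,
    "Microsoft": 4.0,
}


def gap_in_points(observed, baseline):
    """Difference from the baseline in percentage points (negative = below)."""
    return round(observed - baseline, 1)


for label, pct in women_in_ai.items():
    print(f"Women, {label}: {gap_in_points(pct, TAULBEE_WOMEN_PHD):+} points vs. new CS PhDs")

for label, pct in black_in_workforce.items():
    print(f"Black employees, {label}: {gap_in_points(pct, TAULBEE_BLACK_PHD):+} points vs. new CS PhDs")
```

Every figure for women falls below the new-PhD baseline, while every workforce figure for Black employees sits above it, which is the contrast the paragraph above is drawing.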

The AI Now Institute report is concerned about intersectionality. “The overwhelming focus on ‘women in tech’ is too narrow and likely to privilege white women over others.” I heard this concern at the recent NCWIT Summit (see link here). The issues of women are not identical across ethnicities. The other direction of intersectionality is also a concern. My student, Amber Solomon, has published on how interventions for Black students in CS often focus on Black males: Not Just Black and Not Just a Woman: Black Women Belonging in Computing (see link here).

I had not seen previously a report on diversity in just one part of CS, and I’m glad to see it. AI (and particularly the sub-field of machine learning) is growing in importance. We know that having more diversity in the design team makes it more likely that a broader range of issues are considered in the design process. We also know that biased AI technologies are already being developed and deployed (see the Algorithmic Justice League). A new Brookings Institute Report identifies many of the biases and suggests ways of avoiding them (see report here). AI is one of the sub-fields of computer science where developing greater diversity is particularly important.

How to organize a state (summit): From ECEP and NCWIT

Soon after we started the Expanding Computing Education Pathways (ECEP) Alliance, we were asked: What should a state do first? If they want to improve CS Education, what are the steps?

We developed a four step model — you can see a three minute video on ECEP that includes the four step model here. It was evidence-based in the sense that, yup, we really saw states doing this. We had no causal evidence. I’m not sure that that’s possible in any kind of education public policy research.

One of those steps is “Organize.” Gather your allies. Have meetings where you CS Ed people rub elbows with the state public policymakers, like legislators and staffers in the Department of Education (or Department of Public Instruction, or whatever it’s called in your state).

A lot of states have had summits since then (see a list of some here). Now, working with the fabulous NCWIT team of communicators, graphic designers, and social scientists, ECEP has released a state summit toolkit. We can’t yet tell you how to organize a state. We can tell you how to organize a state summit.

From finding change agents to building a steering committee of diverse stakeholders, convenings play an important role in broadening participation in computing at the state level. ECEP and NCWIT have developed the State Summit Toolkit to assist leadership teams as they organize meetings, events, and summits focused on advancing K-16 computer science education.

African-Americans don’t want to play baseball, like women don’t want to code: Both claims are false

I listened to few of my podcasts this summer with our move, so I’m catching up on them now. I just heard one that gave me a whole new insight into Stuart Reges’s essay Why Women Don’t Code.

In Here’s Why You’re Not an Elite Athlete (see transcript here), they consider why:

In 1981, there was 18.7 percent black, African-American players in the major leagues. As of 2018, 7.8 percent.

Why was there such a precipitous drop? David Canton, a professor at Connecticut College, offers three explanations:

I look at these factors: deindustrialisation, mass incarceration, and suburbanization. With deindustrialisation — lack of tax base — we know there’s no funds to what? Construct and maintain ball fields. You see the rapid decline of the physical space in the Bronx, in Chicago, in these other urban areas, which leads to what? Lack of participation.

Suburbanization drew the tax base out of the cities. With fewer taxes in the cities, there were fewer funds to support ball fields and maintain baseball leagues.

The incarceration rate for African-American men is higher than for other demographic groups (see NAACP stats). Canton explains why that impacts participation in baseball:

I can imagine in 1980, if you were 18-year-old black man in L.A., Chicago, New York, all of a sudden, you’re getting locked up for nonviolent offenses. I’m going to assume that you played baseball. I’m arguing that those men — if you did a survey, and go to prison today, federal and state, I bet you a nice percentage of these guys played baseball. Now some were not old enough to have children. And the ones that did weren’t there to teach their son to play baseball, to volunteer in Little League because they were in jail for nonviolent offenses.

There is now a program called RBI, for Reviving Baseball in Inner cities, funded by Major League Baseball, to try to increase the participation in baseball by African-Americans and other under-served youth. There are RBI Academies in Los Angeles, New York, Kansas City, and St. Louis.

So, why are there so few African-Americans in baseball? One might assume that they just choose not to play baseball, just as how Stuart Reges decided that the lack of women in the Tech industry means that they don’t want to code.

I find the parallels between the two stories striking:

- Baseball used to be 18.7% African-American.

- Computer Science used to be 40% female.

- There have been and are great African-American baseball players. (In 1981, 22% of the All-Star game rosters were African-American, according to Forbes.) There is no inherent reason why African-Americans can’t play baseball.

- There have been and are great female computer scientists. There is no inherent reason why women can’t code.

- Today, baseball is only 7.8% African-American.

- Today, computer science is only about 17% female (in undergraduate enrollment).

- There are structural and systemic reasons why there are fewer African-Americans in baseball, such as deindustrialization, suburbanization, and a disproportionate impact of incarceration on the African-American community. (Some commentators say that the whiteness of baseball runs much deeper.)

- There are structural and systemic reasons why there are fewer women in computer science. There are many others, like the thoughtful posts from Jen Mankoff and Anna Karlin, and the heartfelt personal blog post by Kasey Champion, who have listed these far better than I could.

- Major League Baseball recognizes the problem and has created RBI to address it.

- The Tech industry, NSF (e.g., through creation of NCWIT), and others recognize the problem and are working to address it. Damore and Reges are among those in Tech who are arguing that we shouldn’t be trying to address this problem, that there are differences between men and women, and that we’re unlikely to ever reach gender equity in Tech.

Maybe there are people pushing back on the RBI program in baseball, who believe that African-Americans have chosen not to play baseball. I haven’t seen or heard that.

If we accept that we ought to do something to get more African-Americans past the systemic barriers into baseball, isn’t it just as evident that we should do something to get more females into Computing?

The Backstory on Barbie the Robotics Engineer: What might that change?

Professor Casey Fiesler has a deep relationship with Barbie, that started with a feminist remix of a book. I blogged about the remix and Casey’s comments on Barbie the Game Designer in this post. Now, Casey has helped develop a new book “Code Camp with Barbie and Friends” and she wrote the introduction. She tells the backstory in this Medium blog post.

In her essay, Casey considers her relationship with Barbie growing up:

I’ve also thought a lot about my own journey through computing, and how I might have been influenced by greater representation of women in tech. I had a lot of Barbies when I was a kid. For me, dolls were a storytelling vehicle, and I constructed elaborate soap operas in which their roles changed constantly. Most of my Barbies dated MC Hammer because my best friend was a boy who wasn’t allowed to have “girl” dolls, and MC was way more interesting than Ken. I also wasn’t too concerned about what the box told me a Barbie was supposed to be; otherwise I’d have had to create stories about models and ballerinas and the occasional zookeeper or nurse. My creativity was never particularly constrained, but I can’t help but think that even just a nudge — a reminder that Barbie could be a computer programmer instead of a ballerina — would have influenced my own storytelling.

I’ve been thinking about how Barbie coding might influence girls’ future interest in Tech careers. I doubt that Barbie is a “role model” for many girls. Probably few girls want to grow up to be “like Barbie.” What a coding Barbie might do is to change the notion of “what’s acceptable” for girls.

In models of how students make choices in academia (e.g., Eccles’ expectancy-value theory) and how students get started in a field (e.g., Alexander’s Model of Domain Learning), the social context of the decision matters a lot. Students ask themselves “Do I want to do this activity and why?” and use social pressure and acceptance to decide what’s an appropriate class to take. If there are no visible girls coding, then there is no social pressure. There are no messages that programming is an acceptable behavior. A coding Barbie starts to change the answer to the question, “Can someone like me do this?”

Why Don’t Women Want to Code? Better question: Why don’t women choose CS more often?

Jen Mankoff (U. Washington faculty member, and Georgia Tech alumna) has written a thoughtful piece in response to the Stuart Reges blog post (which I talked about here), where she tells her own stories and reframes the question.

Foremost, I think this is the wrong question to be asking. As my colleague Anna Karlin argues, women and everyone else should code. In many careers that women choose, they will code. And very little of my time as an academic is spent actually coding, since I also write, mentor, teach, etc. In my opinion, a more relevant question is, “Why don’t women choose computer science more often?”

My answer is not to presume prejudice, by women (against computer science) or by computer scientists (against women). I would argue instead that the structural inequalities faced by women are dangerous to women’s choice precisely because they are subtle and pervasive, and that they exist throughout a woman’s entire computer science career. Their insidious nature makes them hard to detect and correct.

Source: Why Don’t Women Want to Code? Ask Them! – Jennifer Mankoff – Medium

US National Science Foundation increases emphasis on broadening participation in computing

The computing directorate at the US National Science Foundation (CISE) has increased its emphasis on broadening participation in computing (BPC). (See quote below and FAQ here.) In a pilot program, certain large research grants were required to include a plan to increase the participation of groups or populations underrepresented or underserved in computing. CISE is now expanding the program to include medium- and large-scale grants. The idea is to get more computing researchers nationwide focusing on BPC goals.

CISE recognizes that BPC requires an array of long-term, sustained efforts, and will require the participation of the entire community. Efforts to broaden participation must be action-oriented and must take advantage of multiple approaches to eliminate or overcome barriers. BPC depends on many factors, and involves changing culture throughout academia—within departments, classrooms, and research groups. This change begins with enhanced awareness of barriers to participation as well as remedies throughout the CISE community, including among principal investigators (PIs), students, and reviewers. BPC may therefore involve a wide range of activities, examples of which include participating in professional development opportunities aimed at providing more inclusive environments, joining various existing and future collective impact programs to helping develop and implement departmental BPC plans that build awareness, inclusion, and engagement, and conducting outreach to underrepresented groups at all levels (K-12, undergraduate, graduate, and postgraduate).

In 2017, CISE commenced a pilot effort to increase the community’s involvement in BPC, by requiring BPC plans to be included in proposals for certain large awards [notably proposals to the Expeditions in Computing program, plus Frontier proposals to the Cyber-Physical Systems and Secure and Trustworthy Cyberspace (SaTC) programs]. By expanding the pilot to require that Medium and Large projects in certain CISE programs [the core programs of the CISE Divisions of Computing and Communication Foundations (CCF), Computer and Network Systems (CNS), and Information and Intelligent Systems (IIS), plus the SaTC program] have approved plans in place at award time in 2019, CISE hopes to accomplish several things:

- Continue to signal the importance of and commitment to BPC;

- Stimulate the CISE community to take action; and

- Educate the CISE community about the many ways in which members of the community can contribute to BPC.

The long-term goal of this pilot is for all segments of the population to have clear paths and opportunities to contribute to computing and closely related disciplines.

Read more at https://www.nsf.gov/pubs/2018/nsf18101/nsf18101.jsp

Ever so slowly, diversity in computing jobs is improving: It’ll be equitable in a century

A great but sobering blog post from Code.org. Yes, computing is becoming more diverse, but at a disappointingly slow rate. Is it possible to go faster? Or is this just the pace at which we can change a field?

According to the Bureau of Labor Statistics, yes, but very slowly. We’ve analyzed the Current Population Survey data from the past few years to see how many people are employed in computing occupations, and the percentage of women, Black/African American, and Hispanic/Latino employees.

What did we find? There are about 5 million people employed in computing occupations, 24% of whom are women, and 15% of whom are Black/African American or Hispanic/Latino.

Since 2014, the trends in representation, although small, have been moving in the right direction — all three groups showed a tiny increase in representation. However, changes would need to accelerate significantly to reach meaningful societal balance in our lifetimes. If the current pace of increases continue, it would take over a century* until we saw balanced representation in computing careers.

Source: Is diversity in computing jobs improving? – Code.org – Medium
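
To make the “over a century” arithmetic concrete, here is a minimal sketch of a linear extrapolation. The 24% starting point comes from the quote above; the 50% target and the pace of 0.25 percentage points per year are my illustrative assumptions, not figures from the Code.org analysis, and `years_to_parity` is a hypothetical helper name.

```python
import math


def years_to_parity(current_pct, target_pct, points_per_year):
    """Years of steady linear growth needed to move from current_pct to target_pct."""
    if points_per_year <= 0:
        raise ValueError("points_per_year must be positive")
    gap = target_pct - current_pct
    if gap <= 0:
        return 0  # already at or above the target
    return math.ceil(gap / points_per_year)


# 24% is from the quoted post; the 50% target and the 0.25 points/year
# pace are illustrative assumptions, not measured values.
print(years_to_parity(24.0, 50.0, 0.25))
```

Under those assumed numbers, the gap of 26 percentage points takes 104 years to close. Doubling the assumed pace halves the wait, which is the sense in which change would need to accelerate.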

What can the Uber Gender Pay Gap Study tell us about improving diversity in computing?

The gig economy offers the ultimate flexibility to set your own hours. That’s why economists thought it would help eliminate the gender pay gap. A new study, using data from over a million Uber drivers, finds the story isn’t so simple.

Source: What Can Uber Teach Us About the Gender Pay Gap? – Freakonomics

A fascinating Freakonomics podcast explains why women are paid about 7% less than men on Uber. The researchers ruled out discrimination after looking at a variety of sources, and found that three factors explain all of that 7%.

They found that even in a labor market where discrimination can be ruled out, women still earn 7 percent less than men — in this case, roughly 20 dollars an hour versus 21. The difference is due to three factors: time and location of driving; driver experience; and average speed.

The first factor is that women choose to be Uber drivers in different places and at different times than men. Men are far more likely to be driving at 3 am on Saturday morning. The second factor is particularly interesting to me. Men tend to stick around on Uber longer than women, so they learn how to work the system. The third factor is that men drive faster, so they get more rides per hour.

When someone from Uber was asked about how they might respond to these results, he focused on the second factor.

But for example, you could imagine that if we make our software easier to use and we can steepen up the learning curve, then if people learn more quickly on the system, then that portion of the gap could be resolved via some kind of intervention. But that’s just an example. And we’re not there yet with our depth of understanding, to just simply write off the gender gap as a preference.

Improving learning might help shrink the gender pay gap. Obviously, I’m connecting this to computing education here. What role could computing education play in reducing gaps between males and females in computing? We have reason to believe that our inability to teach programming well led to the gender gap in computing. Could we make things better if we could teach computing well?

Here are two thoughts exploring that question.

- We know (e.g., from Unlocking the Clubhouse) that men tend to sink more time into programming, which can give them a lead in undergraduate education (what Jane Margolis has called ‘preparatory privilege’). What if we could teach programming more efficiently? Could we close that gap? If we had a science of teaching programming, we could improve efficiency so that a few hours of focused effort in the classroom might lead to more effective learning than tens of hours of figuring out how to compile under Debian Linux.

- When I first started thinking about the “phonics of computing education” and our ebooks, I was inspired by work from Caroline Simard that suggested that helping female mid-level managers keep up their technical skills could help them to progress in the tech industry. Female mid-level managers have less time to invest in technical learning, and at the mid-level, technical education still matters. If you have a project that needs a new toolset, you’ll more likely give it to the manager who knows that toolset. If we could teach female mid-level technical managers more effectively and efficiently, could they make it into the C-suite of tech companies?

Maybe better computing education could be an important part of improving diversity, along multiple paths.

SIGCSE 2018 Preview: Black Women in CS, Rise Up 4 CS, Community College to University CS, and Gestures for Learning CS

While I’m not going to be at this year’s SIGCSE, we’re going to have a bunch of us there presenting cool stuff.

On Wednesday, Barb Ericson is going to this exciting workshop, CS Education Infrastructure for All: Interoperability for Tools and Data Analytics, organized by Cliff Shaffer, Peter Brusilovsky, Ken Koedinger, and Stephen Edwards. Barb is eager to talk about her adaptive Parsons Problems and our ebook work.

My PhD student, Amber Solomon, is presenting a paper at RESPECT 2018 (see program here) with Dekita Moon, Amisha Roberts, and Juan Gilbert, Not Just Black and Not Just a Woman: Black Women Belonging in Computing. They talk about how expectations of being Black in CS and expectations of being a woman in CS come into conflict for the authors.

On Thursday, Barb is presenting her paper (with Tom McKlin) Helping Underrepresented Students Succeed in AP CSA and Beyond, which reports the amazing results of the alumni study from Project Rise Up, her effort to help underrepresented students succeed at Advanced Placement CS A. When Barb was choosing her dissertation topic, she considered both Rise Up and adaptive Parsons problems. She decided on the latter, so you might think of this paper as the final chapter of the dissertation she would have written had she made Rise Up her focus. Project Rise Up grew from Barb’s interest in AP CS A and her careful, annual analysis of success rates in AP CS A for various demographics (here is her analysis for 2017). It had a strong impact (and was surprisingly inexpensive), as seen in the follow-on statistics and the quotes from the students, now years after Rise Up. I recommend going to the talk: she has more than could fit into the paper.

On Friday, my PhD student, Katie Cunningham, is presenting Upward Mobility for Underrepresented Students: A Model for a Cohort-Based Bachelor’s Degree in Computer Science with her colleagues from California State University Monterey Bay and Hartnell College. The full author list is Sathya Narayanan, Katie, Sonia Arteaga, William J. Welch, Leslie Maxwell, Zechariah Chawinga, and Bude Su. They’re presenting the “CSin3” program, which drew in students from traditionally underrepresented groups and helped them earn CS degrees with remarkable success: a three-year graduation rate of 71%, compared to a 22% four-year graduation rate, as well as job offers from selective tech companies. The paper describes the features of the program that made it so successful, like its multi-faceted support outside the classroom, the partnership between a community college and a university, and its cohort model. The paper has been recognized with a SIGCSE 2018 Best Paper Award in the Curricula, Programs, Degrees, and Position Papers track.

On Friday, my colleague Betsy DiSalvo is going to present at the NSF Showcase some of the great work that she and her student, Kayla des Portes, have been doing with Maker Oriented Learning for Undergraduate CS.

On Saturday, my EarSketch colleagues are presenting their paper, Authenticity and Personal Creativity: How EarSketch Affects Student Persistence, by Tom McKlin, Brian Magerko, Taneisha Lee, Dana Wanzer, Doug Edwards, and Jason Freeman.

Also on Saturday, Amber and her undergraduate researchers, Vedant Pradeep and Sara Li, are presenting a poster that is also a data collection activity, so I hope that many of you will stop by. Their poster is The Role of Gestures in Learning Computer Science. They are interested in how gesture can help with CS learning and might be an important evaluation tool: students who understand their code tend to gesture differently when describing it than students who have less understanding. They want to show attendees what they’ve seen, but more importantly, they want feedback on the gestures they’ve observed “in the wild.” Have you seen these? Have you seen other gestures that might be interesting and useful to Amber and her team? What other kinds of gestures do you use when explaining CS concepts? Please come by and help inform them about the gestures you see when teaching and learning CS.

Georgia Tech Launches Constellations Center Aimed at Equity in Computing

The Constellations Center was launched at a big event on December 11. I was there to hear Executive Director Charles Isbell host the night, which included a great conversation with Senior Director Kamau Bobb (formerly of NSF).

Constellations is going to play a significant role in keeping a focus on broadening participation in computing in Georgia, and it aims to serve as a national leader in making sure that everyone gets access to computing education.

Georgia Tech’s College of Computing has launched the Constellations Center for Equity in Computing with the goal of democratizing computer science education. The mission of the new center is to ensure that all students—especially students of color, women, and others underserved in K-12 and post-secondary institutions—have access to quality computer science education, a fundamental life skill in the 21st century.

Constellations is dedicated to challenging and improving the national computer science (CS) educational ecosystem through the provision of curricular content, educational policy assessment, and development of strategic institutional partnerships. According to Senior Director Kamau Bobb, democratizing computing requires a “real reckoning with the race and class divisions of contemporary American life.”

See more here.

How the Imagined “Rationality” of Engineering Is Hurting Diversity — and Engineering

Just a few weeks ago, Richard Thaler won the Nobel prize in Economics. Thaler is famous for showing that real human beings are not the wholly rational beings that economic theory had previously assumed. It’s timely to consider where else we assume rationality, and where that assumption may lead us into flawed decisions and undesirable outcomes. The article below, from Harvard Business Review, considers how dangerous the engineering “purity” argument is.

Just how common are the views on gender espoused in the memo that former Google engineer James Damore was recently fired for distributing on an internal company message board? The flap has women and men in tech — and elsewhere — wondering what their colleagues really think about diversity. Research we’ve conducted shows that while most people don’t share Damore’s views, male engineers are more likely to…

But our most interesting finding concerned engineering purity. “Merit is vastly more important than gender or race, and efforts to ‘balance’ gender and race diminish the overall quality of an organization by reducing collective merit of the personnel,” a male engineer commented in the survey. Note the undefended assumption that tapping the full talent pool of engineers rather than limiting hiring to a subgroup (white men) will decrease the quality of engineers hired. Damore’s memo echoes this view, decrying “hiring practices which can effectively lower the bar for ‘diversity’ candidates.”

Google and taxpayer money, Damore opines, “is spent to water only one side of the lawn.” Many male engineers in our survey agreed that women engineers are unfairly favored. “As regards gender bias, my workplace offers women more incentives and monetary support than it does to males,” commented one male engineer. Said another, women “will always be safe from a RIF [reduction in force]. As well as certain companies guaranteeing female engineers higher raises.”

Source: How the Imagined “Rationality” of Engineering Is Hurting Diversity — and Engineering
