Posts tagged ‘teachers’

Putting a Teaspoon of Programming into Other Subjects (May 2023 Communications of the ACM): About Teaspoon Languages

In May, my students and I published a paper in Communications of the ACM, “Putting a Teaspoon of Programming into Other Subjects” (see link here) about our work with teaspoon languages. (Submitted version, non-paywalled is here.) It’s a short Viewpoint, but we were able to squeeze into our 1800-word limit a description of a couple of teaspoon languages, a definition of them, a description of our participatory design process for them, and some of the research questions we’re exploring with them, like what drives teacher adoption of teaspoon languages, how multilingual keywords can engage emerging bilingual students, and which challenges remain even with our simplified notions of programming.

My students helped me to be consistent with our language in this piece, which was so helpful. I’ve been talking about teaspoon languages for a while, and my language has likely changed over that time. They’re challenging me to be more exact about what I mean.

For example, we use the phrase “teaspoon languages” and not “teaspoon programming languages.” The term “teaspoon” comes from the shorthand “TSP” for “Task-Specific Programming.” So, the “programming” bit is already in there. But in particular, I don’t want to generate the reaction, “But, hey, that doesn’t look like a real programming language…”

Programming languages are used to create software — preferably, software that is reliable, robust, safe, and secure. The programming languages research community works to make programming more effective for people who are using those languages to create software. Programming as an activity can also be used to solve problems and explore domains. We’re building languages for that latter purpose. Much of the programming that scientists and others do to solve problems and explore domains happens to be in programming languages that can also be used to create software (e.g., Python, R, Mathematica, MATLAB). Teaspoon languages (so far) can’t really be used to create software for someone else to execute. They’re not general. I don’t think any of the teaspoon languages that we have created are Turing complete. But teaspoon languages are used to define the behavior of a computational agent. It’s still programming.

Another question we hear quite a bit is “Isn’t this just a domain-specific language?” We tried to answer that in the piece. Yes, teaspoon languages are a kind of domain-specific language, but for a very small domain: a single task. The most critical part of teaspoon languages is “They can be used by students for a task that is useful to a teacher.” DSLs are so much bigger than teaspoon languages. Maybe we can use DSL tools one day to make teaspoon languages, but so far, we’ve built unique user interfaces and unique languages for each one. The focus is on meeting the need now, and we’ll see if we ever get to generalizability and tools later.

The issues we study in our research with teaspoon languages don’t have much overlap with the programming languages research community. I don’t have good answers to questions like, “How do you support type safety?” or “Why can’t I define a lambda in Pixel Equations?” So, we’ll just call them “teaspoon languages” — and let the “programming” word be silent in there.

Participatory Design to Support University to High School Curricular Transition/Translation in FIE 2022

Here’s my second blog post on papers we presented during the first year of PCAS. Emma Dodoo is an Engineering Education Research PhD student working with me and co-advised with Lisa Lattuca. When she first started working with me, she wanted a project that supported STEM learning in high school. We happened upon this fascinating project which eventually led to an FIE 2022 paper (see link here).

The University of Michigan Marsal Family School of Education has a collaboration with the School at Marygrove in the Detroit Public Schools – Community District. The School at Marygrove requires all high school students to take a course in Engineering every year. The school is new, so when we came into the story, they were just starting to build an 11th grade Engineering curriculum. Where does an innovative K-12 school find curriculum for not-often-taught subjects like Engineering? It seemed natural to look to the partner university.

The University of Michigan has recently established a Robotics Department with an innovative undergraduate curriculum. The leadership in the U-M School of Education and the School at Marygrove decided to use some projects from the undergraduate curriculum for the 11th grade Engineering curriculum. Emma and I came in to run participatory design sessions to help with supporting the high school in adopting the university curriculum. We focused on one project in particular, where students would input data from a LIDAR sensor on a robot, then visualize the results. What we wanted to know was: What are the issues that come up when using a university curriculum to inform an innovative high school curriculum?

We started out with a set of interviews. We talked to undergraduates who had been in the Robotics curriculum and asked them: What was hard about the robotics projects? What were things that you wished you knew before you started? They gave us a list of issues, like the realization that equations on a plane could be used to define regions of a picture, that colors could be mapped to numbers and equations, and that pixels could be queried for their colors.

We talked to high school educators about what they wanted students to learn from the project. They were pretty worried about the mathematics required for the project, especially after the students had spent all of 10th grade on-line during the pandemic.

Emma took these objectives and concerns, and generated a set of possible activities to be used in the class. She used Desmos, Geogebra, and our new Pixel Equations teaspoon language. (I mentioned back in this blog post that we were using Pixel Equations in participatory design sessions — that’s when this study was happening.)

Pixel Equations was developed explicitly for the concerns that the undergraduates were raising and that the math educators cared about. Users specify the pixels they want to manipulate (leftmost column) by providing an equation on a plane or an equation based on the RGB channels in the color in the pixel. They specify the desired color changes in terms of the red, green, and blue channels (three columns on the right). The syntax for the boolean expressions and the equations for calculating colors is the same as what students would see in Java, C, or JavaScript. But there are no explicit loops, conditionals, or data — it’s a teaspoon language.
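To make the idea concrete, here is a minimal Python sketch of what a single Pixel Equations rule does. It is not the actual Pixel Equations implementation: the names `apply_rule`, `selects`, `new_red`, `new_green`, `new_blue`, and `clamp` are hypothetical, and clamping channel values to 0-255 is an assumption. The point is that the student writes only the boolean expression and the three color expressions, while the loop over pixels and the conditional stay hidden.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Image = List[List[Pixel]]      # indexed as image[y][x]
Expr = Callable[[int, int, int, int, int], float]       # (x, y, r, g, b) -> channel value
Condition = Callable[[int, int, int, int, int], bool]   # (x, y, r, g, b) -> selected?


def clamp(value: float) -> int:
    """Keep a computed channel value inside the 0-255 range (an assumption)."""
    return max(0, min(255, int(value)))


def apply_rule(image: Image, selects: Condition,
               new_red: Expr, new_green: Expr, new_blue: Expr) -> Image:
    """Apply one rule to every pixel; the loop and conditional are implicit for the student."""
    result: Image = []
    for y, row in enumerate(image):
        new_row: List[Pixel] = []
        for x, (r, g, b) in enumerate(row):
            if selects(x, y, r, g, b):
                new_row.append((clamp(new_red(x, y, r, g, b)),
                                clamp(new_green(x, y, r, g, b)),
                                clamp(new_blue(x, y, r, g, b))))
            else:
                new_row.append((r, g, b))  # unselected pixels are left unchanged
        result.append(new_row)
    return result


# A 4x4 gray image, and one rule: for pixels where x > y,
# boost red, keep green, and zero out blue.
gray: Image = [[(100, 100, 100) for _ in range(4)] for _ in range(4)]
tinted = apply_rule(
    gray,
    selects=lambda x, y, r, g, b: x > y,
    new_red=lambda x, y, r, g, b: r * 2 + 100,
    new_green=lambda x, y, r, g, b: g,
    new_blue=lambda x, y, r, g, b: 0,
)
```

The lambdas stand in for the four cells of one row in the tool: the condition in the leftmost column and the three channel expressions on the right.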

Emma ran participatory design sessions with stakeholders, including teachers from the School at Marygrove. Her goal was to identify the features that the stakeholders would find valuable, and in particular, to identify the concerns of the high school educators that may not be addressed in the university curriculum. She identified four sets of issues that were important to the stakeholders when transferring curriculum from the university to the high school:

- Prior Knowledge: The students knew Desmos. Using a tool they used before would help a lot when dealing with the new robotics concepts.

- Priming: The computing must be introduced so that there is time for the students to become familiar with it.

- Motivational Play: High school students need more opportunities to see the fun in an activity than undergraduate students.

- Self-efficacy: It’s important for students to feel that they can succeed at the activities.

That’s where the paper ends

All of this design and development happened during the pandemic. We didn’t hear much about what was going on in the high school, and we couldn’t visit. When we talked to our contacts at the School of Education, we found that they didn’t have much news either. It wasn’t until much later that we saw this news item. The 11th grade Engineering class actually didn’t do any of the mathematics activities that we’d helped with. Instead, the school got a grant for a bunch of robots, and the classes focused on directly programming the robots instead. It’s disappointing that they didn’t use any of the things that we worked on, but as I’ve been mentioning in this blog, we find that adoption is really hard. Other factors (like grants and the wow factor of programming a robot) can change priorities.

The paper is interesting for investigating stakeholder issues when transferring activities from university to high schools. Those are useful issues to know about, but even if you address all the issues, you still might not get adoption.

The information won’t just sink in: Helping teachers provide technology-assisted data literacy instruction in social studies

Last year, Tammy Shreiner and I published an article in the British Journal of Educational Technology, “The information won’t just sink in: Helping teachers provide technology-assisted data literacy instruction in social studies.” (I haven’t been able to blog much the last year while starting up PCAS, so please excuse my tardiness in sharing this story.) The journal version of the paper is here, and our final submitted version (not paywalled) is available here.

Tammy and I used this paper to describe what happened (mostly during the pandemic) as we continued to provide support to in-service/practicing social studies teachers to adopt data literacy instruction in their classes. Since this was a journal on educational technology, we mostly focused on two technologies:

- The OER Tammy created to support data literacy in social studies education — see link here.

- DV4L, the Data Visualization for Learning tool that we created explicitly for social studies teachers — see link here.

When we started collaborating, we looked for a theoretical model that could inform our work. The end goal was easy to describe: we wanted social studies teachers to teach data literacy. But it’s hard to measure progress towards that big, high-level goal. Teachers are teaching data literacy, or they’re not. How do you know if you’re getting closer to the goal? We structured our work and our evaluation around the Technology Acceptance Model (TAM). TAM suggests that adoption of a new technology boils down to two questions: (1) is the technology actually useful in solving a problem that users care about, and (2) is the technology usable by the users? Those were things that we could measure progress towards.

During the pandemic, we ran several on-line professional learning opportunities — a workshop where practicing teachers could try out the OER with some guidance (e.g., “Make sure you see this” and “Why don’t you try that?”), and kick the tires on a bunch of tools including DV4L. We gathered lots of data on those teachers, and Tammy did the hard work of analyzing those data over time. We made progress on TAM goals — our tools got more usable and more useful.

But we still got very little adoption. TAM didn’t work for us. Adoption didn’t increase as usability and usefulness increased.

Why not? That’s a really big question, and we barely touch on it in this paper. It’s now a couple of years since we wrote the BJET article, and I could now tick off a dozen bullet points of reasons why teachers do not adopt, despite a technology being both useful and usable. I’m not going to list them here, because there are other publications in the pipeline. Bahare Naimipour, the EER PhD student working on our project, is finishing a case study of some teachers who did adopt and how their beliefs about data literacy changed.

I can give you a big meta-reason which probably isn’t a surprise to most education researchers but might be a surprise to many computer scientists: It’s not all about (or even mostly about) the technology. I led the group that worked on DV4L, and I’ve been directing students who have been helping Tammy make the OER more usable and useful (including building new tools that we haven’t yet released). TAM matters, but the characteristics of the individual teachers and the context of the teacher’s classroom are critical factors that technology is unlikely to overcome.

This is work funded in part by our National Science Foundation grant, #DRL2030919

Getting feedback on Teaspoon Languages from CS educators and researchers at the Raspberry Pi Foundation seminar series

In May, I had the wonderful opportunity to speak at the Raspberry Pi Foundation Seminar series. I’ve attended some of these seminars before. I highly recommend them (see past seminars here). It’s a terrific format. The speaker presents for up to a half hour, then everyone gets put into a breakout room for small group discussions. The participants and speaker come back for 30-35 minutes of intensive Q&A — at least, it feels “intensive” from the speaker’s perspective. The questions you get have been vetted through the breakout room process. They’re insightful, and sometimes critical, but always in a constructive way. I was excited about this opportunity because I wanted to make it a hands-on session where the CS teachers and researchers who attended might actually use some Teaspoon Languages and give me feedback on them. I have rarely had the opportunity to work with CS teachers, so I was excited for the opportunity.

Sue Sentance wrote up a very nice blog post describing my talk (thank you!) — see here. The video of the talk and discussion is available. You can watch the whole thing, or, you can read the blog post then skip ahead to where the conversation takes place (around 26:00 in the video). If you have been wondering, “Why isn’t Mark just using Logo, Scratch, Snap, or NetLogo? We already have great tools! Why invent new languages that are clearly less powerful than what we already have?”, then you should jump to 34:38 and see Ken Kahn (inventor of ToonTalk) push me on this point.

The whole experience was terrific for me, and I hope that it’s valuable for the viewer and attendees as well. The questions and comments indicated understanding and appreciation for what I’m trying to do, and the concerns and criticisms are valuable input for me and my team. Thanks to Sue, Diana Kirby, the Raspberry Pi Foundation, and all the attendees!

From Guided Exploration to Possible Adoption: Patterns of Pre-Service Social Studies Teacher Engagement with Programming and Non-Programming Based Learning Technology Tools

In October, Bahare Naimipour presented our paper “From Guided Exploration to Possible Adoption: Patterns of Pre-Service Social Studies Teacher Engagement with Programming and Non-Programming Based Learning Technology Tools” (Naimipour, Guzdial, Shreiner, and Spencer, 2021) at the Society for Information Technology and Teacher Education (SITE) 2021 conference. (Draft of the paper is available here. Full paywall version available here.) This paper is the first one about our work with social studies teachers since we received NSF funding. It was also a report on our last face-to-face participatory design session (in March 2020) before the pandemic lockdown. And most importantly, it was our first session with our data visualization tool DV4L in the mix.

I have blogged about our participatory design sessions before (see Bahare’s FIE paper from last Fall). Basically, we set up a group of social studies teachers in pairs, then ask them to try out various visualization tools with activity sheets that we have created to scaffold their process. The goal is to get everyone to make a visualization successfully in less than 10 minutes, and leave time to explore or try one (or both) other tools. There is time for the pairs to persuade each other to (a) come try the cool tool they found or (b) avoid this tool because it’s too hard or not useful. The tools in this set were Vega-Lite (a declarative programming tool which our teachers have found complex but useful in the past), CODAP (a drag-and-drop visualization tool designed for middle and high school students), and our DV4L (a purpose-built visualization tool that makes code visible but not required).

The teachers saw value in having students build visualizations themselves (e.g., “I think making your own data visualization allows for a deeper connection and understanding of the data.”). As we hoped, they teased out what they liked and disliked about the tools. Most of the teachers preferred DV4L over the other two tools, because of its simplicity. Critically, they felt that they were engaging with the inquiry and not the tool: “(With DV4L) I found myself asking questions connected to the data itself, rather than asking questions in order to figure out how to work the visual.”

That teachers found DV4L easier than Vega-Lite isn’t really surprising. We were pleased that teachers weren’t disappointed with DV4L’s more limited visualization capabilities. What was really surprising was that our teachers preferred DV4L to CODAP, and this has happened in successive in-service teacher participatory design sessions during the pandemic. CODAP is drag-and-drop, creates high-quality visualizations, and was designed explicitly for middle and high school students. A teacher in one of our in-service design sessions explained to me why she preferred DV4L to CODAP. “CODAP is really powerful, but it would take me at least three hours to get my students comfortable with it. Is it worth it?” Just how much visualization is any social studies teacher going to use? Again, too much focus on the tool gets in the way of the social studies inquiry.

Now you might be asking, “But Mark, do the students learn history with DV4L? And do they see and learn about computing?” Great questions — we’re not there yet. Here’s one of our big questions, after running several more participatory design sessions with teachers since the lockdown: Why aren’t teachers adopting DV4L in their classrooms? They tell us that they really like it. But nobody’s adopted yet. How do we go from “ooh, great tool!” to “and here’s my lesson plan, and we’ll use it next week”? That’s an active area of research for all of us right now.

How I’m lecturing during emergency remote teaching

Alfred Thompson (whom most of my readers already know) has a recent blog post requesting: Please blog about your emergency remote teaching (see post here). Alfred is right. We ought to be talking about what we’re doing and sharing our practices, so we get better at it. Reflecting on and sharing our teaching practices is a terrific way to improve CS teaching, which Josh Tenenberg and Sally Fincher told us about in their Disciplinary Commons.

My CACM Blog Post this month is on our contingency plan that we created to give students an “out” in case they become ill or just can’t continue with the class — see post here. I encourage all my readers who are CS teachers to create such a contingency plan and make it explicit to your students.

I am writing to tell you what I’m doing in my lectures with my co-instructor Sai R. Gouravajhala. I can’t argue that this is a “best” practice. This stuff is hard. Eugene Wallingford has been blogging about his emergency remote teaching practice (see post here). The Chronicle of Higher Education recently ran an article about how difficult it is to teach via online video like Zoom or BlueJeans (see article here). We’re all being forced into this situation with little preparation. We just deal with it based on our goals for our teaching practice.

For me, keeping peer instruction was my top priority. I use the recommended peer instruction (PI) protocol from Eric Mazur’s group at Harvard, as was taught to me by Beth Simon, Leo Porter, and Cynthia Lee (see http://peerinstruction4cs.com/): I pose a question for everybody, then I encourage class discussion, then I pose the question again and ask for consensus answers. I use participation in that second question (typically gathered via app or clicker device) towards a participation grade in the class — not correct/incorrect, just participating.

My plan was to do all of this in a synchronous lecture with Google Forms, based on a great recommendation from Chinmay Kulkarni. I would have a Google Form that everyone answered, then I’d encourage discussion. Students are working on team projects, and we have a campus license for Microsoft Teams, so I encouraged students to set that up before lecture and discuss with their teams. On a second Google Form with the same question, I also collect their email addresses. I wrote a script to give them participation credit if I get their email address at least once during the class PI questions.
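A participation script along these lines can be quite small. The sketch below is an illustration, not the script from the class: it assumes each Google Form's responses were exported as CSV text with an `Email Address` column, and the function names, roster, and sample rows are all hypothetical. A student earns credit if their email shows up in at least one of the day's forms.

```python
import csv
import io
from typing import Dict, Iterable, Set


def emails_from_form_export(csv_text: str,
                            email_column: str = "Email Address") -> Set[str]:
    """Collect the normalized email addresses found in one form's CSV export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    emails: Set[str] = set()
    for row in reader:
        email = (row.get(email_column) or "").strip().lower()
        if email:  # skip blank or missing addresses
            emails.add(email)
    return emails


def participation_credit(roster: Iterable[str],
                         form_exports: Iterable[str]) -> Dict[str, bool]:
    """Give credit to anyone whose email appears in at least one export."""
    seen: Set[str] = set()
    for export in form_exports:
        seen |= emails_from_form_export(export)
    return {email: email.strip().lower() in seen for email in roster}


# Hypothetical exports for two PI questions in one lecture.
q1 = ("Timestamp,Email Address,Answer\n"
      "2020-04-01 10:05,ana@example.edu,B\n")
q2 = ("Timestamp,Email Address,Answer\n"
      "2020-04-01 10:40,Ben@Example.edu ,C\n"
      "2020-04-01 10:41,ana@example.edu,A\n")

roster = ["ana@example.edu", "ben@example.edu", "cy@example.edu"]
credit = participation_credit(roster, [q1, q2])
```

Normalizing case and whitespace matters in practice, because students type their addresses inconsistently. Answers are deliberately ignored, since the credit is for participating, not for being correct.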

Then the day before my first lecture, I was convinced on Twitter by David Feldon and Justin Reich that I should provide an asynchronous option (see thread here). I know that I have students who are back home overseas and are not in my timezone. They need to be able to watch the video at another time. I now know that I have students with little Internet access. So, I do all the same things, but I record the lecture and I leave the Google Forms open for 24 hours after the last class. The links to the Google Forms are in the posted slides and in the recorded lectures. To fill out the PI questions for participation, they would have to at least look at the lecture.

I’m so glad that I did. As I tweeted, I had 188 responses to the PI questions after the lectures ended. 24 hours later, I had 233 responses. About 20% of my students didn’t get the synchronous lecture, but still got some opportunity to learn through the asynchronous component. The numbers have been similar for every lecture since that first.
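The “about 20%” figure follows from the two response counts. Here is the arithmetic as a short sketch, which assumes that response counts are a reasonable proxy for the number of students (the post reports responses, not distinct students):

```python
live_responses = 188    # responses when the lectures ended (from the post)
total_responses = 233   # responses 24 hours later (from the post)

late_responses = total_responses - live_responses   # arrived asynchronously
late_share = late_responses / total_responses

print(f"{late_responses} late responses, {late_share:.1%} of the total")
# prints "45 late responses, 19.3% of the total"
```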

I lecture, but typically only for 10-15 minutes between questions. I have 4-5 questions in an 85 minute lecture. The questions take longer now. I can’t just move the lecture along when most of the students answer, as I could with clickers. I typically give the 130+ students 90 seconds to get the link entered and answer the question.

I have wondered if I should just go to a fully asynchronous lecture, so I asked my students via a PI question. 85% say that they want to see the lecturer in the video. They like that I can respond to chat and to answers in Google Forms. (I appreciate how Google Forms lets me see a summary of answers in real-time, so that I can respond to answers.) I’d love to have a real, synchronous give-and-take discussion, but my class is just too big. I typically get 130+ students synchronously participating in a lecture. It’s hard to have that many students participate in the chat, let alone see video streams for all of them.

We’re down to the last week of lecture, then we’ll have presentations of their final projects. They will prepare videos of their presentations, and receive peer comments. Each student has been assigned four teams to provide peer feedback on. Each team has a Google Doc to collect feedback on their project.

So, that’s my practice. In the comments, I’d welcome advice on improving the practice (though I do hope not to have to do this again anytime soon!), and your description of your practice. Let’s share.

Why don’t high schools teach CS: It’s the lack of teachers, but it’s way more than that (Miranda Parker’s dissertation)

Back in October, I posted here about Miranda Parker’s defense. Back then, I tried to summarize all of Miranda’s work as a doctoral student in one sentence:

Readers of this blog will know Miranda from her guest blog post on the Google-Gallup polls, her development of SCS1 as a replication of a multi-lingual and validated measure of CS1 knowledge, the study she did of teacher-student differences in using ebooks, and her work exploring the role of spatial reasoning to relate SES and CS performance (work that was part of her dissertation study).

That was a seriously run-on sentence for an impressive body of work.

On Friday, December 13, I had the great honor of placing an academic hood on Dr. Parker*.

I haven’t really talked too much about Miranda’s dissertation findings yet. I really want to, but I also don’t want to steal the thunder from her future publications. So, with her permission, I’m going to just summarize some of my favorite parts.

First, Miranda built several regression models to explain Georgia high schools teaching computer science in 2016. I predicted way back at her proposal that the biggest factor would be wealth — wealthy schools would teach CS and poorer schools wouldn’t. I was wrong. Yes, that’s a statistically significant factor, but it doesn’t explain much.

The biggest factor is…teaching computer science in 2015. Schools that taught CS in 2015 were many times more likely to be teaching in 2016. Schools have to get started! Hadi Partovi made this argument to me once, that getting started in schools was the biggest part of the battle. Miranda’s model supports his argument. There’s much more to the story, but that’s the biggest takeaway for me on the quantitative analysis.

But her model only explains a bit over 50% of the variance. What explains the rest? We figured that there would be many different factors. To make it manageable, Miranda chose just four high schools to study as case studies where she did multiple interviews. All four of the schools were predicted by her best model to teach computer science, but none of them did.

Yes, as you’d expect, access to a teacher is the biggest factor, but it’s not as simple as just deciding to hire a CS teacher or train an existing teacher to teach CS. For example, one principal laid out for Miranda who could teach CS and who would take their class, and how to fill that gap in the schedule, and so on. In the end, he had a choice of offering choir or offering CS. There were students in choir. CS was a gamble. It wasn’t even a hard decision for that principal.

Here is the story that most surprised me. At two of the schools Miranda studied, they teach lots of cybersecurity classes, but no computer science. As you would expect, there was a good bit of CS content in the cybersecurity classes. They had the teachers. Why not then teach CS, too?

Because both of these schools were near Fort Gordon, which has a huge cybersecurity district. Cybersecurity is a community value. It’s a sure thing when it comes to getting a job. What’s “computer science” in comparison?

In my opinion, there is nothing like Miranda’s study in the whole world. There are the terrific Roehampton reports that give us a quantitative picture about CS in all of England. There are great qualitative studies like Stuck in the Shallow End that tell us what’s going on in high schools. Miranda did both and connected them — the large scale quantitative analysis, and then used that to pick four interesting high schools to dig into for qualitative analysis. It’s a story specific to Georgia, and each US state is going to be a different story. But it’s a whole state, the right level of analysis in the US. It’s a fascinating story, and I’m proud that she pulled it off.

Keep an eye out for her publications about this work over the next couple years.

* By the way, Dr. Parker is currently on the academic job market.

What’s generally good for you vs what meets a need: Balancing explicit instruction vs problem/project-based learning in computer science classes

Lauren Margulieux has posted another of her exceptionally interesting journal article summaries (see post here). Her post summarizes a recent article asking which is more effective: direct instruction or learning through problem-solving-first (as in project-based learning, problem-based learning, or just about any introductory computer science course in any school anywhere)? Direct instruction won by a wide margin.

Lauren points out that there are lots of conditions when problem-solving-first might make sense. In more advanced classes where students have lots of expertise, we should use a different teaching strategy than what we use in introductory classes. When the subject matter isn’t cognitively complex (e.g., memorizing vocabulary words), there are advantages to having the students try to figure it out themselves first. Neither of these conditions is true for introductory computer science.

This is an on-going discussion in computing education. Felienne Hermans had a keynote at the 2019 RStudio Conference where she made an argument for explicit direct instruction (see link here). I made an argument for direct instruction in Blog@CACM last November (see post here). Back in 2017, I recommended balancing direct instruction and projects (see post here), because projects are clearly more motivating and authentic for computer science students, while the literature suggests that direct instruction leads to better learning, even of problem-solving skills.

Lately, I’ve been thinking about this question with a health metaphor. Let me try it here:

Everybody should exercise, right? Exercise provides a wide variety of benefits (listed in a fascinating blog post from Freakonomics from this June), including cardiovascular improvements, better aging, better sleep, and less stress. But if you have a heart problem, you’re going to get treatment for that, right? If you’re having high cholesterol, you should continue to exercise (or even increase it), but you might also be prescribed a statin. If you have a specific need (like a vitamin deficiency), you address that need.

Students in computing should work on projects. It’s authentic, it’s motivating, and there are likely a wide range of benefits. But if you want to gain specific skills, e.g., you want to achieve learning objectives, teach those directly. Don’t just assign a big project and hope that they learn the right things there. If you want to see specific improvement in specific areas, teach those. So sure, assign projects — but in balance. Meet the students’ needs AND give them opportunities to practice project skills.

And when you teach explicitly: Always, ALWAYS, ALWAYS use active learning techniques like peer instruction. It’s simply unethical to lecture without active learning.

How the Cheesecake Factory is like Healthcare and CS Education

Stephen Dubner of the Freakonomics Podcast did an interview with Atul Gawande, author of the Checklist Manifesto. Atul is a proponent of a methodical process — from a behavioral economist perspective, not from an optimization perspective. People make mistakes, and if we’re methodical (like the use of checklists), we’re less likely to make those mistakes. If we can turn our process into a “recipe,” then we will make fewer mistakes and have more reliable results. Here’s a segment of the interview where he argues for that process in healthcare.

DUBNER: Okay, what’s the difference between a typical healthcare system and say, a restaurant chain like the Cheesecake Factory?

GAWANDE: You’re referring to the article I wrote about the Cheesecake Factory…Basically what I was talking about was the idea that, here’s this restaurant chain. And yes, it’s highly caloric, but the Cheesecake Factorys here have as much business as a medium-sized hospital — $100 million in business a year. And they would cook to order every meal people had. And in order to make that happen, they have to run a whole process that they have real cooks, but then they have managers.

I was talking to one of the managers there about how he would make healthcare work. And his answer was, “Here’s what I would do, but of course you guys do this. I would look to see what the best people are doing. I would find a way to turn that into a recipe, make sure everybody else is doing it, and then see how far we improve and try learning again from that.” He said, “You do that, right?” And we don’t. We don’t do that.

Here’s where Gawande’s approach ties to education. Later in the interview:

DUBNER: And do you ever in the middle of, let’s say, a surgery think about, “Oh, here’s what I will be writing about this day?”

GAWANDE: You know, I don’t really; I’m in the flow. One of the things that I love about surgery is: it is, I have to confess, the least stressful thing I do, because at this point I’ve done thousands of the operations I do. Ninety-seven to 98 percent go pretty much as expected, and the 2 percent that don’t, I know the 10 different ways that are most common that they’ll go wrong and I have approaches to it. So it’s kind of freeing in a certain way.

I think about teaching like this. I have been teaching computing since 1980. I actively seek out new teaching methods. Teaching CS is fun for me — because I know lots of ways to do it, I have choices which keeps it interesting. I have mentioned the fascinating work on measuring teacher PCK (Pedagogical Content Knowledge) by checking how accurately teachers can predict how students will get exam questions wrong. I know lots of ways students can get computer science wrong. I still get surprises, but because I’m familiar with how students can get things wrong, I have a starting place for supporting students in constructing stronger conceptions.

We can teach this. The model of expertise that Gawande describes is achievable for CS teachers. We can teach new CS teachers methods that they can choose from. We can teach them common student mental models of programming, how to diagnose weaker understandings, and ways to help them improve their mental models. We can make a checklist of what a CS teacher needs to know and be able to do.

Why high school teachers might avoid teaching CS: The role of industry

Fascinating blog post from Laura Larke that helps to answer the question: Why isn’t high school computing growing in England? The Roehampton Report (pre-release of the 2019 data available here) has tracked the state of computing education in England, which the authors describe as a “steep decline.” Laura starts her blog post with the provocative question “How does industry’s participation in the creation of education policy impact upon what happens in the classroom?” She describes teachers who aim to protect their students’ interests — giving them what they really need, and making judgments about where to allocate scarce classroom time.

What I found were teachers acting as gatekeepers to their respective classrooms, modifying or rejecting outright a curriculum that clashed with local, professional knowledge (Foucault, 1980) of what was best for their young students. Instead, they were teaching digital skills that they believed to be more relevant (such as e-safety, touch typing, word processing and search skills) than the computer-science-centric content of the national curriculum, as well as prioritising other subjects (such as English and maths, science, art, religious education) that they considered equally important and which competed for limited class time.

Do we see similar issues in US classrooms? It is certainly the case that the tech industry is painted in the press as driving the effort to provide CS for All. Adam Michlin shared this remarkable article on Facebook, “(Florida) Gov. DeSantis okay with substituting computer science over traditional math and science classes required for graduation.” Florida is promoting CS as a replacement for physics or pre-calculus in the high school curriculum.

“I took classes that I enjoyed…like physics. Other than trying to keep my kids from falling down the stairs in the Governor’s mansion I don’t know how much I deal with physics daily,” the governor said.

The article highlights the role of the tech industry in supporting this bill.

Several top state lawmakers attended as well as a representative from Code.org, a Seattle-based nonprofit that works to expand computer science in schools. Lobbyists representing Code.org in Tallahassee advocated for HB 7071, which includes computer science initiatives and other efforts. That’s the bill DeSantis is reviewing.

A Microsoft Corporation representative also attended the DeSantis event. Microsoft also had lobbyists in Tallahassee during the session, advocating for computer science and other issues.

The US and England have different cultures. Laura’s findings do not automatically map to the US. I’m particularly curious whether US teachers are similarly dubious about the value of a CS curriculum when it’s perceived as a tech industry ploy.

Computer Science Teachers as Provocateurs: All learning starts from a problem

One of the surprising benefits of working with social science educators (history and economics) has been new perspectives on my own teaching. I’ve studied education for several years, and have worked with science and mathematics education researchers in the past. It hadn’t occurred to me that history education is so different that it would give me a new way of looking at my own teaching.

Last week, I was in a research meeting with Bob Bain, a history and education professor here at U. Michigan. He was describing how historians understand knowledge and what historians’ practice looks like, and how that should be reflected in the history classroom.

He said that all learning in history starts from a problem. That gave me pause. What’s a “problem” in history?

Bob explained that he defines a problem as John Dewey did, as something that disturbs the equilibrium. “Activities at the Dewey School arose from the child’s own interests and from the need to solve problems that aroused the child’s curiosity and that led to creative solutions.” We don’t think until our environment is disturbed, but that environment may just be in your own head.

We each have our own stories that we use to explain the world, and these make up our own personal equilibria. Maybe students have always been told that the American Civil War was about states’ rights, and then they read the Georgia Declaration of Secession. Maybe they’ve thought of Columbus as the explorer who discovered America, and then note that he wasn’t celebrated until 1792, 300 years after his arrival. Why wasn’t he celebrated earlier, and why him and at that time? A good history teacher sets up these conflicts, disequilibria, or problems. Bob says it can be easy enough to create, simply by showing two contrasting accounts of the same historical event.

Research in the learning sciences supports this definition of learning. Roger Schank talked about the importance of learning through “expectation failure.” You learn when you realize that you don’t know something:

The understanding cycle – expectation failure – explanation – reminding – generalization – is a natural one. No one teaches it to us. We are not taught to have goals, nor to attempt to develop plans to achieve those goals by adapting old plans from similar situations. We need not be taught this because the process is so basic to what comprises intelligence. Learning is a natural act.

In progressive education, we’re told that the teacher should be a “Guide on the Side, not the Sage on the Stage.” When Janet Kolodner was developing Learning By Design, she talked about the role of teacher as coach and orchestrator. Those were roles I was familiar with. Bob was describing a different role.

I challenged him explicitly, “You’re a provocateur. You create the problems in the students’ minds.” He agreed.

Bob got me thinking about the role of the teacher in the computer science class. We can sometimes be a guide, a coach, and an orchestrator when students are working away on some problem or project. But sometimes, we have to be the provocateur.

We should always start from a problem. In science education, this is easy. Kids naturally do wonder why the sky is blue, why sunsets are more red, why heat travels along metal but not wood, and why stars twinkle. In more advanced computer science, we can also start from questions that students already have. I’m taking a MOOC right now because it explains things I’ve wondered about.

But in introductory classes, students already use a computer without problems. They may not see enough of real computing to wonder about how it works. The teacher has to generate a problem, inculcate curiosity — be a provocateur.

We should only teach something when it solves a problem for the student. A lecture on variables and types should be motivated by a problem that the variables and types solve. A lecture on loops should happen when students need to do something so often that copy-pasting the code repeatedly won’t work. Saying “You’re going to need this later” is not motivation enough — that doesn’t match the cycle that Schank described as natural. Nobody remembers things they will need in the future. Learning results when you need new knowledge to resolve the current problem, disequilibria, or conflict.
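As a minimal sketch of what such a motivating problem might look like, here is a copy-paste solution next to the loop that replaces it. The greeting task and the student names are hypothetical, chosen only for illustration; they are not drawn from any particular course.

```python
# Hypothetical classroom task: produce a greeting for every student.
students = ["Ana", "Ben", "Chen", "Dee"]

# Copy-paste version: manageable for four students, painful for forty.
greetings_copied = [
    "Hello, " + students[0],
    "Hello, " + students[1],
    "Hello, " + students[2],
    "Hello, " + students[3],
]

# Loop version: the same statement runs once per student,
# however long the list grows.
greetings_looped = []
for name in students:
    greetings_looped.append("Hello, " + name)

# Both approaches give the same result; only one of them scales.
print(greetings_looped == greetings_copied)
```

The point of showing the copied version first is that students feel the repetition before the loop is offered as the way out of it.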

Note: Computer science doesn’t teach problem-solving. Dewey’s and Schank’s point is that problem-solving is a natural way in which people learn. Learning to program still doesn’t teach problem-solving skills.

Using MOOCs for Computer Science Teacher Professional Development

When our ebook work was funded by IUSE, our budget was cut from what we proposed. Something had to be dropped from our plan of work. What we dropped was a comparison between ebooks and MOOCs. I had predicted that we could get better learning and higher completion rates from our ebooks than from our MOOCs. That’s the part that got dropped — we never did that comparison.

I’m glad now. It’s kind of a ridiculous comparison because it’s about the media, not particular instances. I’m absolutely positive that we could find a terrible ebook that led to much worse results than the absolutely best possible MOOC, even if my hypothesis is right about the average ebook and the average MOOC. The medium itself has strengths and weaknesses, but I don’t know how to experimentally compare two media.

I’m particularly glad since I wouldn’t want to go up against Carol Fletcher and her creative team who are finding ways to use MOOCs successfully for CS teacher PD. You can find their recent presentation “Comparing the Efficacy of Face to Face, MOOC, and Hybrid Computer Science Teacher Professional Development” on SlideShare:

Carol sent me a copy of the paper from the 2016 “Learning with MOOCs” conference*. I’m quoting from the abstract below:

This research examines the effectiveness of three primary strategies for increasing the number of teachers who are CS certified in Texas to determine which strategies are most likely to assist non-CS teachers in becoming CS certified. The three strategies compared are face-to-face training, a MOOC, and a hybrid of both F2F and MOOC participation. From October 2015, to August of 2016, 727 in-service teachers who expressed an interest in becoming CS certified participated in one of these pathways. Researchers included variables such as educational background, teaching certifications, background in and motivation to learn computer science, and their connection to computer science through their employment or the community at large as covariates in the regression analysis. Findings indicate that the online only group was no less effective than the face-to-face only group in achieving certification success. Teachers that completed both the online and face-to-face experiences were significantly more likely to achieve certification. In addition, teachers with prior certification in mathematics, a STEM degree, or a graduate degree had greater odds of obtaining certification but prior certification in science or technology did not. Given the long-term lower costs and capacity to reach large numbers that online courses can deliver, these results indicate that investment in online teacher training directed at increasing the number of CS certified teachers may prove an effective mechanism for scaling up teacher certification in this high need area, particularly if paired with some opportunities for direct face-to-face support as well.

That they got comparable results from MOOC-based on-line and face-to-face is an achievement. It matches my expectations that a blended model with both would be more successful than just on-line.

Carol and team are offering a new on-line course for the Praxis test that several states use for CS teacher certification. You can find details about this course at https://utakeit.stemcenter.utexas.edu/foundations-cs-praxis-beta/.

* Fletcher, C., Monroe, W., Warner, J., Anthony, K. (2016, October). Comparing the Efficacy of Face-to-Face, MOOC, and Hybrid Computer Science Teacher Professional Development. Paper presented at the Learning with MOOCs Conference, Philadelphia, PA.

The Ground Truth of Computing Education: What Do You Know?

Earlier this month, I was a speaker at a terrific event at Cornell Tech, “To Code & Beyond: Thinking & Doing,” organized by Diane Levitt (see Tweet here). I spoke, and then was on a panel with Kelly Powers, Thea Charles, Aman Yadav, and Diane to discuss what Computational Thinking is.

One of the highlights of the day for me was listening to Margaret Honey, a legendary educational technology designer and researcher (see bio here). She is President and CEO of the New York Hall of Science. One of my favorite parts of her talk was a description of the apps that they’re building to get kids to notice and measure things in their world. I even love the URL for their tools — https://noticing.nysci.org/

At the event, Diane mentioned that she was working on a blog post about her “ground truth” — what she most believed about CS education. She shared it as a tweet right after the event. It’s lovely and deep — find it here.

A couple of my favorite of her points:

Students thrive when we teach at the intersection of rigor and joy. In computer science, it’s fun to play with the real thing. But sometimes we water it down until it’s too easy—and kids know it. Struggle itself will not turn kids away from computer science. They want relevant learning experiences that lead to building things that matter to them. “I can do hard things!” is one of the most powerful thoughts a student can have.

The biggest lever we have is the one we aren’t using enough yet: preservice education for new teachers. The sooner we start teaching computer science education alongside the teaching of math and reading, during teachers’ professional preparation programs, the sooner we get to scale. It’s expensive and time-consuming to continually retool our workforce. Eventually, if every teacher enters the classroom prepared to include computer science, every student will be prepared for the digital world in which they live. This is what we mean by equity: equal access for every student, regardless of geography, gender, income, ability, or, frankly, interest.

Sara Judd answered Diane’s post with one of her own — find it here. I really enjoy it because she sees computer science like I do. It’s not just about problem-solving, but also about making things and connecting to the world.

Programming makes things.

While programming for it’s own sake can be fun for some people, (me, for instance) generally when people are programming it is because there is a thing that needs to be made. These things can be expressive pieces of visual art or music. These things can be silly fun for fun’s sake. These things can revolutionize the world, they can make our lives easier. The important thing is, they are “things.” CS doesn’t exist in a vacuum. Therefore, classroom CS should not exist in a vacuum.

I encourage more of us to do this — to write down what we believe about CS education, then share the essays. It’s great to hear goals and perspectives, both to learn new ones and also to recognize that others share how we think about it. I particularly enjoy reading these from people with different life experiences. I have a privileged life as a University CS professor. Teachers in K-12 struggle with very different things. I’m so pleased when I find that we still have similar goals for and perspectives about CS education.

Do we know how to teach secure programming to K-12 students and end-user programmers?

I wrote my CACM Blog post this month on the terrific discussion that Shriram started in my recent post inspired by Annette Vee’s book (see original post here), “The ethical responsibilities of the student or end-user programmer.” I asked several others, besides the participants in the comment thread, about what responsibility they thought students and end-user programmers bore for their code.

One more issue to consider, which is more computing education-specific than the general issue in the CACM Blog. If we decided that K-12 students and end-user programmers need to know how to write secure programs, could we? Do we know how? We could tell students, “You’re responsible,” but that alone doesn’t do any good.

Simply teaching about security is unlikely to do much good. I wrote a blog post back in 2013 about the failings of financial literacy education (see post here) which is still useful to me when thinking about computing education. We can teach people not to make mistakes, or we can try to make it impossible to make mistakes. The latter tends to be more effective and cheaper than the former.

What would it take to get students to use best practices for writing secure programs and to test their programs for security vulnerabilities? In other words, how could you change the practice of K-12 student programmers and end-user programmers? This is a much harder problem than setting a learning objective like “Students should be able to sum all the elements in an array.” Security is a meta-learning objective. It’s about changing practice in all aspects of other learning objectives.

What would it take to get CS teachers to teach improved security practices? Consider for example an idea generally accepted to be good practice: We could teach students to write and use unit tests. Will they when not required to? Will they write good unit tests and understand why they’re good? In most introductory courses for CS majors, students don’t write unit tests. That’s not because it’s not a good idea. It’s because we can’t convince all the CS teachers that it’s a good idea, so they don’t require it. How much harder will it be to teach K-12 CS teachers (or even science or mathematics teachers who might be integrating CS) to use unit tests, or to teach secure programming practices?
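For readers who have not seen one, here is a minimal sketch of what a unit test looks like, using Python’s standard `unittest` module. The function `sum_elements` and the three test cases are hypothetical; they simply reuse the “sum all the elements in an array” objective as something small enough to test.

```python
import unittest


def sum_elements(values):
    """Return the sum of all the numbers in the list `values`."""
    total = 0
    for value in values:
        total += value
    return total


class SumElementsTest(unittest.TestCase):
    # Each test states one expectation about the function's behavior.
    def test_typical_list(self):
        self.assertEqual(sum_elements([1, 2, 3]), 6)

    def test_empty_list(self):
        self.assertEqual(sum_elements([]), 0)

    def test_negative_numbers(self):
        self.assertEqual(sum_elements([-2, 2, -5]), -5)


# Load and run the tests explicitly so the script keeps going afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(SumElementsTest)
result = unittest.TextTestRunner().run(suite)
```

Even in this tiny case, choosing the empty list and the negative numbers as test cases takes some understanding of where a summing function could go wrong, which is part of why “write good unit tests” is harder to teach than “write unit tests.”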

I have often wondered: Why don’t introductory students use debuggers, or use visualization tools effectively (see Juha Sorva’s excellent dissertation for a description of how students use visualizers)? My hypothesis is that debuggers and visualizers presume that the user has an adequate mental model of the notional machine. The debugging options Step In or Step Over only make sense if you have some understanding of what a function or method call does. If you don’t, then those options are completely foreign to you. You don’t use something that you don’t understand, at least, not when your goal is to develop your understanding.

Secure programming is similar. You can only write secure programs when you can envision alternative worlds where users type the wrong input, or are explicitly trying to break your program, or worse, are trying to do harm to your users (what security people sometimes call adversarial thinking). Most K-12 and end-user programmers are just trying to get their programs to work in a perfect world. They simply don’t have a model of the world where any of those other things can happen. Writing secure programs is a meta-objective, and I don’t think we know how to achieve it for programmers other than professional software developers.
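To make the contrast concrete, here is a small sketch of the same task written for a perfect world and then written with wrong or hostile input in mind. Both functions and the 0 to 130 age range are hypothetical choices for illustration, and input validation is only one small piece of secure programming.

```python
def parse_age_trusting(text):
    # Perfect-world version: assumes the user typed a sensible whole number.
    # Raises ValueError on anything else.
    return int(text)


def parse_age_defensive(text):
    # Defensive version: treats every input as possibly wrong or hostile.
    # Returns None rather than crashing or accepting a nonsensical value.
    try:
        age = int(text.strip())
    except ValueError:
        return None
    if not 0 <= age <= 130:  # illustrative range, an assumption
        return None
    return age


# Inputs a student rarely imagines until someone types them.
for attempt in ["12", " 12 ", "twelve", "-5", "9999999999"]:
    print(repr(attempt), "->", parse_age_defensive(attempt))
```

The second function is not harder to write than the first. What is hard is imagining the inputs in that final list before anyone has typed them.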

Analyzing CS in Texas school districts: Maybe enough to take root and grow

My Blog@CACM for this month is about Code.org’s decision to gradually shift the burden of paying for CS professional development to the local regions (see link here). It’s an important positive step that needs to happen to make CS sustainable with the other STEM disciplines in K-12 schools.

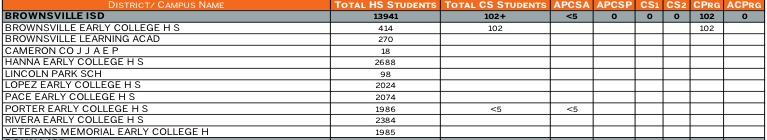

We’re at an interesting stage in CS education. 40-70% of high schools have CS, but the classes are pretty empty. I use Indiana and Texas as examples because they’ve made a lot of their data available. Let’s drill a bit into the Texas data to get a flavor of it, available here. I’m only going to look at Area 1’s data, because even just that is deep and fascinating.

Brownsville Independent School District. 13,941 students. 102 in CS.

Of the 10 high schools in Brownsville ISD, only two high schools have anyone in their CS classes. Brownsville Early College High School has 102 students in CS Programming (no AP CS Level A, no AP CSP). That probably means that one teacher has several sections of that course, which is quite a bit. The other high school, Porter Early College High School, has fewer than five students in AP CS A. My bet is that there is no CS teacher there, only a handful of students doing an on-line class. That means for 10 high schools and 13K students, there is really only one high school CS teacher.
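A quick back-of-the-envelope calculation shows how thin that is. This sketch uses only the two Brownsville figures quoted above; the variable names are mine, and the handful of students at Porter are left out because the data report them only as fewer than five.

```python
district_students = 13_941  # Brownsville ISD students, as quoted above
cs_students = 102           # students in CS, as quoted above

# Fraction of the district's students who are in a CS class.
share = cs_students / district_students
print(f"{share:.2%} of students are in a CS class")

# The same figure expressed as "one student in every N".
one_in = round(district_students / cs_students)
print(f"roughly 1 in {one_in} students")
```

That works out to well under one percent of students, or about one in every 137.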

Edinburg Consolidated Independent School District, over 10K students, 92 students in CS.

This is a district that could grow CS if there were the will. There are 6 high schools, but two are special cases: one with fewer than 5 students, and the other in a juvenile detention center. The other four high schools are huge, with over 2000 students each. In Economedes, there are only 9 students in AP CS A (maybe just on-line?). Edinburg North and Robert R Vela High School each have two classes: AP CS A and CS1. With 21 and 14, I’m guessing two sections. The other has 43 and 6. That might be two sections of AP CS A and another of CS1, or two sections of AP CS A and 6 students in an on-line class. In any case, this suggests two high school CS teachers (maybe three) in half of the high schools in the district. Those teachers aren’t teaching only CS, but with increased demand and support from principals, the CS offerings could grow.

It’s fascinating to wander through the Texas data, to see what’s there and what’s not. I could be wrong about what’s there, e.g., maybe there’s only one teacher in Edinburg and she’s moving from school to school. Given these data, it’s unlikely that every high school has a CS teacher who just isn’t teaching any CS. These data are a great snapshot. There is CS in Texas high schools, and maybe there’s enough there to take root and grow.
