Posts tagged ‘transfer’

A Scaffolded Approach into Programming for Arts and Humanities Majors: ITiCSE 2023 Tips and Techniques Papers

I am presenting two “Tips and Techniques” papers at the ITiCSE 2023 conference in Turku, Finland on Tuesday July 11th. The papers present the same scaffolded sequence of programming languages and activities, just in two different contexts. The complete slide deck in PowerPoint is here. (There’s a lot more in there than just the two talks, so it’s over 100 MB.)

When I met with my advisors on our new PCAS courses (see previous blog post), one of the overarching messages was “Don’t scare them off!” Faculty told me that some of my arts and humanities students will be put off by mathematics and may have had negative experiences with (or perceptions of) programming. I was warned to start gently. I developed this pattern as a way of easing into programming, while showing the connections throughout.

The pattern is:

- Introduce computer representations, algorithms, and terms using a teaspoon language. We spend less than 10 minutes introducing the language, and 30-40 minutes of class time in total (including student in-class activities). It’s about getting started at low cost (in time and effort).

- Move to Snap! with custom blocks explicitly designed to be similar to the teaspoon language. We design the blocks to promote transfer, so that both the language (surface-level terms) and the notional machine are similar. Students do homework assignments in Snap!.

- At the end of the unit, students use a Runestone ebook, with a chapter for each unit. The ebook chapter has (1) a Snap! program seen in class, (2) a Python or Processing program which does the same thing, and (3) multiple choice questions about the text program. These questions were inspired by discussions with Ethel Tshukudu and Felienne Hermans last summer at Dagstuhl where they gave me advice on how to promote transfer — I’m grateful for their expertise.

I always teach with peer instruction now (because of the many arguments for it), so steps 1 and 2 have lots of questions and activities for students throughout. These are in the talk slides.

Digital Image Filters

The first paper is “Scaffolding to Support Liberal Arts Students Learning to Program on Photographs” (submitted version of paper here). We use this unit in this course: COMPFOR 121: Computing for Creative Expression.

Step 1: The teaspoon language is Pixel Equations, which I blogged about here. You can run it here.

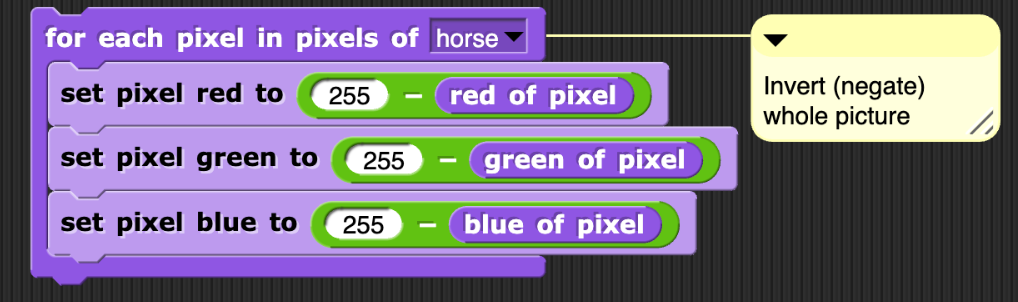

Students choose an image to manipulate as input, then specify their image filter by (a) writing a logical expression describing the pixels that they want to manipulate and (b) writing equations for how to compute the red, green, and blue channels for those pixels. Values for each channel are 0 to 255, and we talk about single byte values per channel. The equation for specifying the channel change can also reference the previous values of the channels, using the variables red, green, blue, rojo, verde, or azul.
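The structure that students write in Pixel Equations (a condition that selects pixels, plus one equation per channel computed from the old red, green, and blue values) can be sketched in Python. This is a minimal illustration, not Pixel Equations’ actual implementation: it assumes an image stored as a list of rows of (red, green, blue) tuples, and the names `apply_filter` and `clamp` are hypothetical.

```python
from typing import Callable

Pixel = tuple[int, int, int]  # (red, green, blue), each 0..255
Image = list[list[Pixel]]     # assumed representation: rows of pixels

def clamp(value: int) -> int:
    """Keep a channel value inside the single-byte range 0..255."""
    return max(0, min(255, value))

def apply_filter(
    image: Image,
    condition: Callable[[int, int, int], bool],
    new_red: Callable[[int, int, int], int],
    new_green: Callable[[int, int, int], int],
    new_blue: Callable[[int, int, int], int],
) -> Image:
    """Return a new image in which every pixel that satisfies `condition`
    has its channels recomputed from that pixel's previous values."""
    result: Image = []
    for row in image:
        new_row: list[Pixel] = []
        for red, green, blue in row:
            if condition(red, green, blue):
                new_row.append((
                    clamp(new_red(red, green, blue)),
                    clamp(new_green(red, green, blue)),
                    clamp(new_blue(red, green, blue)),
                ))
            else:
                new_row.append((red, green, blue))  # unselected pixels stay as they were
        result.append(new_row)
    return result

# Example filter: pixels with red > 150 become gray (the average of
# their three channels); all other pixels are left unchanged.
photo: Image = [[(200, 40, 40), (10, 120, 30)]]
gray_reds = apply_filter(
    photo,
    condition=lambda r, g, b: r > 150,
    new_red=lambda r, g, b: (r + g + b) // 3,
    new_green=lambda r, g, b: (r + g + b) // 3,
    new_blue=lambda r, g, b: (r + g + b) // 3,
)
print(gray_reds)  # [[(93, 93, 93), (10, 120, 30)]]
```

In this sketch, negation would be a condition that is always true (`lambda r, g, b: True`) with each channel computed as 255 minus its previous value. Clamping is a design choice here to keep every result within a single byte; a real tool might handle out-of-range values differently.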

Step 2: The latest version of the pixel microworld for Snap! is available here. Click “See Code” to see all the examples. I leave lots of worked examples in the projects as a starting point for homework and other projects.

Here’s what negation looks like:

Here’s an example of replacing a green background with the Alice character so that Alice is standing in front of a waterfall.
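The green-screen idea can be sketched the same way: select the pixels that look green, and take their color from the pixel at the same position in a background image. This Python sketch is only an illustration under stated assumptions, not the code in the Snap! microworld: images are lists of rows of (red, green, blue) tuples, both images are the same size, and the `is_green` test and its margin of 50 are hypothetical choices.

```python
Pixel = tuple[int, int, int]  # (red, green, blue), each 0..255
Image = list[list[Pixel]]

def is_green(pixel: Pixel, margin: int = 50) -> bool:
    """Assumed test: green exceeds both red and blue by more than `margin`."""
    red, green, blue = pixel
    return green > red + margin and green > blue + margin

def chromakey(foreground: Image, background: Image) -> Image:
    """Replace green pixels in `foreground` with the pixel at the same
    position in `background`. Both images are assumed to be the same size."""
    return [
        [bg if is_green(fg) else fg for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]

alice = [[(20, 230, 25), (180, 150, 120)]]      # a green-screen pixel, then a character pixel
waterfall = [[(90, 140, 200), (95, 145, 205)]]  # background scene
print(chromakey(alice, waterfall))  # [[(90, 140, 200), (180, 150, 120)]]
```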

For the homework assignment, students create their own image filters, then generate a collage of their own images (photos or drawings) in both their original and filtered forms.

Step 3: The Runestone ebook chapter on pixels is here.

Questions after the Python code include “Why do we have the for loop above?” and “What would happen if we changed all the 255 values to 120? (Yes, it’s totally fair to actually try it.)”

Recognizing and Generating Human Language

The second paper is “Scaffolding to Support Humanities Students Programming in a Human Language Context” (submitted version here). I originally developed this unit for the course COMPFOR 111: Computing’s Impact on Justice: From Text to the Web, because we use chatbots early on in that course. Later, I added chatbots as an expressive medium to the Expression course, and we use parts of this unit in that course, too.

Step 1: I created a little teaspoon language for sentence recognition and generation. It’s the first time that I’ve created a teaspoon language for a course that I’m teaching myself, because I needed one for my course context. The language is available here (you switch between recognition and generation from a link in the upper left corner).

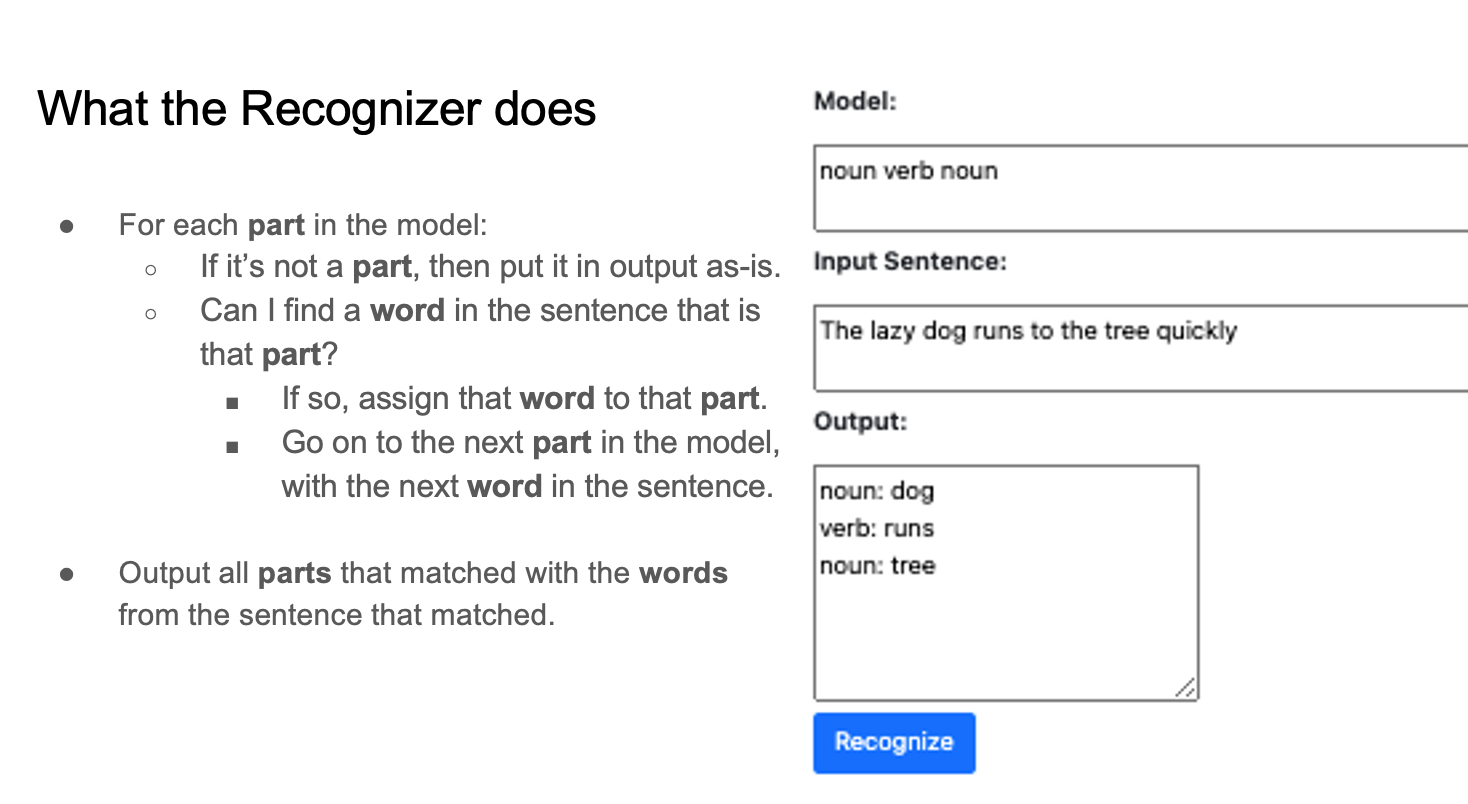

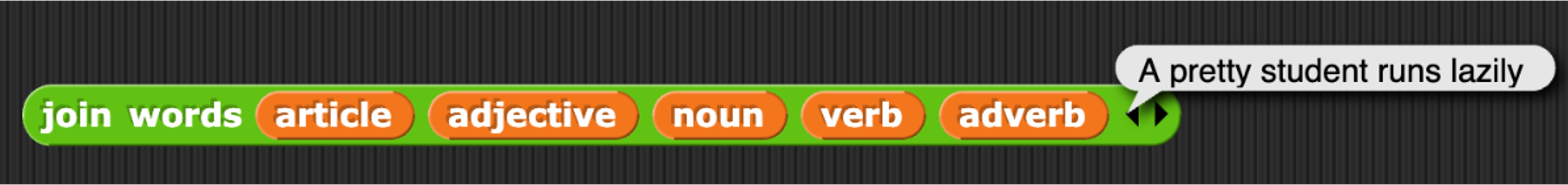

The program here is a sentence model. It can use five parts of speech: noun, verb, adverb, adjective, and article. Above the sentence model is the dictionary, or lexicon. Sentence generation creates 10 random sentences from the model. Sentence recognition also takes an input sentence, then tries to match the elements in the model to the input sentence. I explain the recognition behavior like this:

This is very simple, but it’s enough to create opportunities to debug and question how things work.

- I give students sentences and models to try. Why is “The lazy dog runs to the student quickly” recognized as “noun verb noun” but “The lazy dog runs to the house quickly” not recognized? Because “house” isn’t in the original lexicon. As students add words to the lexicon, we can talk about program behavior being driven by both algorithm and data (which sets us up for talking about the importance of training data when creating ML systems later).

- I give them sentences in different English dialects and ask them to explore how to make models and lexicons that can match all the different forms.

- For generation, I ask them: Which leads to better generated sentences? Smaller models (“noun verb”) or larger models (“article adjective noun verb adverb”)? Does adding more words to the lexicon help? Or tuning the words that are already in the lexicon?
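Here is a minimal Python sketch of a sentence model, a lexicon, generation, and recognition, using the example sentences above. It is not the teaspoon language’s implementation. In particular, the matching rule (scan left to right, skipping words until one fits the next part of speech in the model) is an assumption about how recognition could work, and the lexicon contents and function names are hypothetical.

```python
import random

# Hypothetical lexicon: each part of speech maps to the words it allows.
LEXICON: dict[str, list[str]] = {
    "article": ["the", "a"],
    "adjective": ["lazy", "curious"],
    "noun": ["dog", "student", "boat"],
    "verb": ["runs", "floats"],
    "adverb": ["quickly", "slowly"],
}

def generate(model: list[str], lexicon: dict[str, list[str]], rng: random.Random) -> str:
    """Build one sentence by picking a random word for each part of speech."""
    words = [rng.choice(lexicon[part]) for part in model]
    sentence = " ".join(words)
    return sentence[0].upper() + sentence[1:] + "."

def recognize(model: list[str], lexicon: dict[str, list[str]], sentence: str) -> bool:
    """Assumed matching rule: for each part of speech in the model, skip ahead
    to the next word that the lexicon lists under that part of speech.
    Words that match nothing (like "to") are skipped over."""
    words = sentence.lower().rstrip(".!?").split()
    position = 0
    for part in model:
        while position < len(words) and words[position] not in lexicon[part]:
            position += 1
        if position == len(words):
            return False  # ran out of words before matching this part of speech
        position += 1     # consume the matched word
    return True

model = ["noun", "verb", "noun"]
print(recognize(model, LEXICON, "The lazy dog runs to the student quickly"))  # True
print(recognize(model, LEXICON, "The lazy dog runs to the house quickly"))    # False: "house" is not in the lexicon

rng = random.Random(0)
for _ in range(3):
    print(generate(["article", "adjective", "noun", "verb", "adverb"], LEXICON, rng))
```

Adding `"house"` to the noun list makes the second sentence match too, which is the point above about program behavior depending on both the algorithm and the data.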

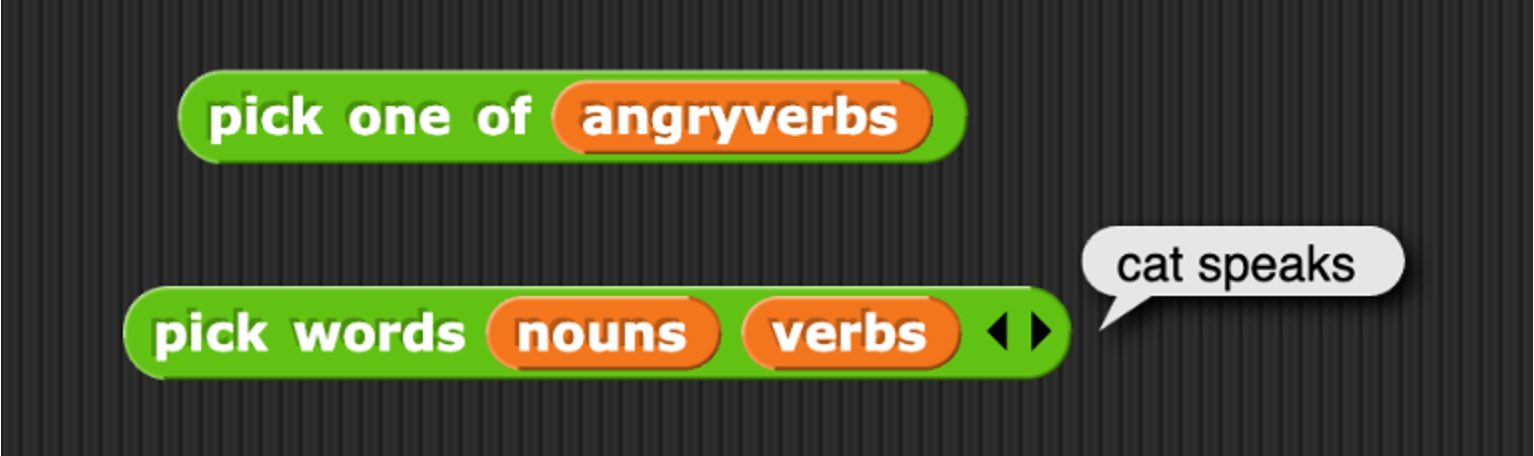

Step 2: Tamara Nelson-Fromm built the first set of blocks for language recognition and generation, and I’ve added to them since. These include blocks for language recognition.

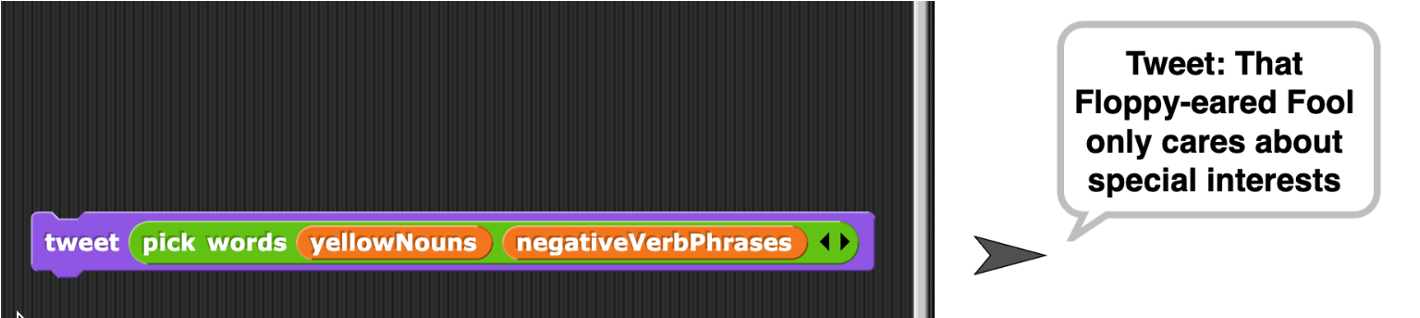

And language generation.

The examples in this section are fun. We create politically biased bots who tweet something negative about one party, listen for responses about their own party, then say something positive in retort about their party.

We create scripts that generate Dr. Seuss-like rhymes.

The homework in this section is to generate haiku.

Step 3: The Runestone ebook chapter for this unit is here. The chapter starts out with the sentence generator, then Snap! blocks that do the same thing, and then two different Python programs that do the same thing. We ask questions like “Which of the following is a sentence that could NOT be produced from the code above?” and “Let’s say that you want to make it possible for the program to generate ‘A curious boat floats.’ Which of the lines below do you NOT have to change?”

Where might this pattern be useful?

We don’t use this whole three-step pattern for every unit in these classes. We do something similar for chatbots, but that’s really it. Teaspoon languages in these classes are about getting started, to get past the “I like computers. I hate coding” stage (as described by Paulina Haduong in a paper I cite often). We use the latter two steps in the pattern more often — each class has an ebook with four or five chapters. The Snap to Python steps are about increasing the authenticity for the block-based programming and developing confidence that students can transfer their knowledge.

I developed this pattern to give non-STEM (arts and humanities) students a gradual, scaffolded approach to programming, but it could be useful in other contexts:

- We originally developed teaspoon languages for integrating computing into other subjects. The first two steps in this process might be useful in non-CS classes to create a path into Snap programming.

- The latter two steps might be useful to promote transfer from block-based into textual programming.

There is transfer between programming and other subjects: Skills overlap, but it may not be causal

A 2018 paper by Ronny Scherer et al., “The cognitive benefits of learning computer programming: A meta-analysis of transfer effects,” was making the rounds on Twitter. They looked at 105 studies and found a measurable amount of transfer between programming and situations requiring mathematical skills and spatial reasoning. But here’s the critical bit: it may not be causal. We cannot predict that students learning programming will automatically get higher mathematics grades, for example. They make a distinction between near transfer (doing things that are very close to programming, like mathematics) and far transfer, which might include creative thinking or metacognition (e.g., planning):

Despite the increasing attention computer programming has received recently (Grover & Pea, 2013), programming skills do not transfer equally to different skills—a finding that Sala and Gobet (2017a) supported in other domains. The findings of our meta-analysis may support a similar reasoning: the more distinct the situations students are invited to transfer their skills to are from computer programming the more challenging the far transfer is. However, we notice that this evidence cannot be interpreted causally—alternative explanations for the existence of far transfer exist.

Here’s how I interpret their findings. Learning programming involves learning a whole set of skills, some of which overlap with skills in other disciplines. For example, you have to be able to evaluate an expression with variables, once you know the numeric values of those variables, in both programming and mathematics. Those skills transfer. Farther transfer depends on how much overlap there is. Certainly, you have to plan in programming, but not all of the sub-skills for the kinds of planning used in programming appear in every problem where you have to plan. The closer the problem is to programming, the more overlap there is, and the more transfer we see.

This finding is similar to a recent paper out of Harvard (see link here) showing that AP Calculus and AP CS both predict success in undergraduate computer science classes. Surprisingly, regular (not AP) calculus is also predictive of undergraduate CS success, but regular CS is not. There are sub-skills in common between mathematics and programming, but the directionality is complicated.

We have known for a long time that we can teach programming in order to get a learning effect in other disciplines. That’s the heart of what Bootstrap does. Sharon Carver showed that many years ago. But that’s different than saying “Let’s teach programming, and see if there’s any effect in other classes.”

So yes, there is transfer between programming and other disciplines — not that it buys you much, and the effect is small. But we can no longer say that there is no transfer.

Measuring progress on CS learning trajectories at the earliest stages

I’ve written in this blog (and talked about many times) how I admire and build upon the work of Katie Rich, Diana Franklin, and colleagues in the Learning Trajectories for Everyday Computing project at the University of Chicago (see blog posts here and here). They define the sequence of concepts and goals that K-8 students need to be able to write programs consisting of sequential statements, to write programs that contain iteration, and to debug programs. While they ground their work in K-8 literature and empirical work, I believe that their trajectories apply to all students learning to program.

Here are some of the skills that appear in the early stages of their trajectories:

- Precision and completeness are important when writing instructions in advance.

- Different sets of instructions can produce the same outcome.

- Programs are made by assembling instructions from a limited set.

- Some tasks involve repeating actions.

- Programs use conditions to end loops.

- Outcomes can be used to decide whether or not there are errors.

- Reproducing a bug can help find and fix it.

- Step-by-step execution of instructions can help find and fix errors.
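Two of these early concepts, that different sets of instructions can produce the same outcome and that programs can use conditions to end loops, fit in a few lines of Python. This is an illustrative sketch, not an item from the Learning Trajectories project; the function names are hypothetical.

```python
def countdown_with_for(start: int) -> list[int]:
    """Repeat a fixed number of times: the loop knows its count in advance."""
    steps = []
    for n in range(start, 0, -1):
        steps.append(n)
    return steps

def countdown_with_while(start: int) -> list[int]:
    """Repeat until a condition fails: the loop ends once n is no longer > 0."""
    steps = []
    n = start
    while n > 0:
        steps.append(n)
        n -= 1
    return steps

# Different instructions, same outcome.
print(countdown_with_for(5))    # [5, 4, 3, 2, 1]
print(countdown_with_while(5))  # [5, 4, 3, 2, 1]
```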

These feel fundamental and necessary, as if you have to learn all of these to progress in programming. But it’s pretty clear that that’s not true. As I describe in my SIGCSE keynote talk (the relevant 4-minute segment is here), there is lots of valuable programming that doesn’t require all of these. For example, most students programming in Scratch don’t use conditions to end loops, yet millions of students find expressive power in Scratch. The Bootstrap: Algebra curriculum doesn’t have students write their own iteration at all, but they learn algebra, which means that there is learning power in even a subset of this list.

What I find most fascinating about this list is the evidence that CS students older than K-8 do not have all these concepts. One of my favorite papers at Koli Calling last year was “It’s like computers speak a different language: Beginning Students’ Conceptions of Computer Science” (see ACM DL link here; free downloads through June 30). They interviewed 14 university students about what they thought computer science was about. One of the explanations they labeled the “Interpreter.” Here’s an example quote exemplifying this perspective:

It’s like computers speak a different language. That’s how I always imagined it. Because I never understood exactly what was happening. I only saw what was happening. It’s like, for example, two people talking and suddenly one of them makes a somersault and the other doesn’t know why. And then I just learn the language to understand why he did the somersault. And so it was with the computers.

This student finds the behavior of computers difficult to understand. They just do somersaults, and computer science is about coming to understand why they do somersaults? This doesn’t convey to me the belief that outcomes are completely and deterministically specified by the program.

I’ll write in June about Katie Cunningham’s paper to appear next month at the International Conference of the Learning Sciences. The short form is that she asked data science students at a university to trace through a program. Two students refused, saying that they never traced code. They did not believe that “Step-by-step execution of instructions can help find and fix errors.” And yet, they were successful data science students.

You may not agree that these two examples (the Koli paper and Katie’s work) demonstrate that some University students do not have all the early concepts listed above, but that possibility brings us to the question that I’m really interested in: How would we know?

How can we assess whether students have these early concepts in the trajectories for learning programming? Just writing programs isn’t enough.

- How often do we ask students to write the same thing two ways? Do students realize that this is possible?

- Students may realize that programming languages are “finicky” but may not realize that programming is about “precision and completeness.”

- Students re-run programs all the time (most often with no changes to the code in between!), but that’s not the same as seeing a value in reproducing a bug to help find and fix it. I have heard many students exclaim, “Okay, that bug went away — let’s turn it in.” (Or maybe that’s just a memory from when I said it as a student…)

These concepts really get at fundamental issues of transfer and plugged vs unplugged computing education. I bet that if students learn these concepts, they would transfer. They address what Roy Pea called “language-independent bugs” in programming. If a student understands these ideas about the nature of programs and programming, they will likely recognize that those are true in any programming language. That’s a testable hypothesis. Is it even possible to learn these concepts in unplugged forms? Will students believe you about the nature of programs and programming if they never program?

I find questions like these much more interesting than trying to assess computational thinking. We can’t agree on what computational thinking is. We can’t agree on the value of computational thinking. Programming is an important skill, and these are the concepts that lead to success in programming. Let’s figure out how to assess these.

Spreadsheets as an intuitive approach to variables: I don’t buy it

A piece in The Guardian (linked by Deepak Kumar on Facebook) described how VisiCalc became so popular, and suggests that spreadsheets make variables “intuitive.” I don’t buy it. Yes, I believe that spreadsheets help students to understand that a value can change (which is what the quote below describes). I am not sure that spreadsheets help students to understand the implications of that change. In SBF (Structure, Behavior, Function) terms, spreadsheets make the structural aspect of variables visible: variables vary. They don’t make evident the behavior (how variables connect to and influence one another), and they don’t help students to understand the function of the variable or of the overall spreadsheet. If we think about the misconceptions that students have about variables, the varying characteristic is not the most challenging one.

The Bootstrap folks have some evidence that their approach to teaching variables in Racket helps students understand variables better in algebra. It would be interesting to explore the use of spreadsheets in a similar curriculum — could spreadsheets help with algebra, too? I don’t expect that we’d get the same results, in part because spreadsheet variables don’t look like algebra variables. Surface-level features matter a lot for novices.

Years ago, I began to wonder if the popularity of spreadsheets might be due to the fact that humans are genetically programmed to understand them. At the time, I was teaching mathematics to complete beginners, and finding that while they were fine with arithmetic, algebra completely eluded them. The moment one said “let x be the number of apples”, their eyes would glaze and one knew they were lost. But the same people had no problem entering a number into a spreadsheet cell labelled “Number of apples”, happily changing it at will and observing the ensuing results. In other words, they intuitively understood the concept of a variable.

Source: Why a simple spreadsheet spread like wildfire | Opinion | The Guardian

Brain training, like computational thinking, is unlikely to transfer to everyday problem-solving

In a recent blog post, I argued that problem-solving skills learned for solving problems in computational contexts (“computational thinking”) were unlikely to transfer to everyday situations (see post here). We see a similar pattern in the recent controversy about “brain training.” Yes, people get better at the particular exercises (e.g., people can learn to problem-solve better when programming). And they may still be better years later, which is great. That’s an indication of real learning. But they are unlikely to transfer that learning to non-exercise contexts. Most surprisingly, they are unlikely to transfer that learning even though they are convinced that they do. Just because you think you’re doing computational thinking doesn’t mean that you are.

Ten years later, tests showed that the subjects trained in processing speed and reasoning still outperformed the control group, though the people given memory training no longer did. And 60 percent of the trained participants, compared with 50 percent of the control group, said they had maintained or improved their ability to manage daily activities like shopping and finances. “They felt the training had made a difference,” said Dr. Rebok, who was a principal investigator.

So that’s far transfer — or is it? When the investigators administered tests that mimicked real-life activities, like managing medications, the differences between the trainees and the control group participants no longer reached statistical significance.

In subjects 18 to 30 years old, Dr. Redick also found limited transfer after computer training to improve working memory. Asked whether they thought they had improved, nearly all the participants said yes — and most had, on the training exercises themselves. They did no better, however, on tests of intelligence, multitasking and other cognitive abilities.

Source: F.T.C.’s Lumosity Penalty Doesn’t End Brain Training Debate – The New York Times

Little Evidence That Executive Function Interventions Boost Student Achievement: So why should computing?

Here’s how I interpret the results described below. Yes, having higher executive function (e.g., being able to postpone the gratification of eating a marshmallow) is correlated with greater achievement. Yes, we have had some success teaching some of these executive functions. But teaching these executive functions has not had any causal impact on achievement. The original correlations between executive function and achievement might have been because of other factors, like the kids who had higher executive function also had higher IQ or came from richer families.

This is relevant for us because the myth that “Computer science teaches you how to think” or “Computer science teaches problem-solving skills” is pervasive in our community. (See a screenshot of my Google search below, and consider this blog post of a few weeks ago.) But there is no support for that belief. If this study finds no evidence that explicitly teaching thinking skills leads to improved transferable achievement, then why should teaching computer science indirectly lead to improved thinking skills and transferable achievement to other fields?

Why do CS teachers insist that we teach for a given outcome (“thinking skills” or “problem-solving skills”) when we have no evidence that we’re achieving that outcome?

The meta-analysis, by researchers Robin Jacob of the University of Michigan and Julia Parkinson of the American Institutes for Research, analyzed 67 studies published over the past 25 years on the link between executive function and achievement. The authors critically assessed whether improvements in executive function skills—the skills related to thoughtful planning, use of memory and attention, and ability to control impulses and resist distractions—lead to increases in reading and math achievement, as measured by standardized test scores, among school-age children from preschool through high school. More than half of the studies identified by the authors were published after 2010, reflecting the rapid increase in interest in the topic in recent years.

While the authors found that previous research indicated a strong correlation between executive function and achievement, they found “surprisingly little evidence” that the two are causally related.

“There’s a lot of evidence that executive function and achievement are highly correlated with one another, but there is not yet a resounding body of evidence that indicates that if you changed executive functioning skills by intervening in schools, that it would then lead to an improvement in achievement in children,” said Jacob. “Although investing in executive function interventions has strong intuitive appeal, we should be wary of investing in these often expensive programs before we have a strong research base behind them.”

via Study: Little Evidence That Executive Function Interventions Boost Student Achievement.

Important paper at SIGCSE 2015: Transferring Skills at Solving Word Problems from Computing to Algebra Through Bootstrap

I was surprised that this paper didn’t get more attention at SIGCSE 2015. The Bootstrap folks are seeing evidence of transfer from the computing and programming activities into mathematics performance. There are caveats on the result, so these are only suggestive results at this time.

What I’d like to see in follow-up studies is more analysis of the students. The paper cited below describes the design of Bootstrap and why they predict impact on mathematics learning, and describes the pre-test/post-test evidence of impact on mathematics. When Sharon Carver showed impact of programming on problem-solving performance (mentioned here), she looked at what the students did — she showed that her predictions were met. Lauren Margulieux did think-aloud protocols to show that students were really saying subgoal labels to themselves when transferring knowledge (see subgoal labeling post). When Pea & Kurland looked for transfer, they found that students didn’t really learn CS well enough to expect anything to transfer — so we need to demonstrate that they learned the CS, too.

Most significant bit: Really cool that we have new work showing potential transfer from CS learning into other disciplines.

Many educators have tried to leverage computing or programming to help improve students’ achievement in mathematics. However, several hopes of performance gains—particularly in algebra—have come up short. In part, these efforts fail to align the computing and mathematical concepts at the level of detail typically required to achieve transfer of learning. This paper describes Bootstrap, an early-programming curriculum that is designed to teach key algebra topics as students build their own videogames. We discuss the curriculum, explain how it aligns with algebra, and present initial data showing student performance gains on standard algebra problems after completing Bootstrap.

via Transferring Skills at Solving Word Problems from Computing to Algebra Through Bootstrap.

National Academies Report Defines ’21st-Century Skills’

I looked up this report, expecting to see something about computation as a ‘21st-century skill.’ The report is not what I expected, and probably more valuable than what I was looking for. Rather than focus on which content is most valuable (which leads us to issues like the current debate over whether we ought to teach algebra anymore), the panel emphasized “nonacademic skills,” e.g., the ability to manage your time so that you can graduate, and intrapersonal skills. I also appreciated how careful the panel was about transfer, noting that we do know how to teach for transfer within a domain, but not between domains.

Stanford University education professor Linda Darling-Hammond, who was not part of the report committee, said developing common definitions of 21st-century skills is critical to current education policy discussions, such as those going on around the Common Core State Standards. She was pleased with the report’s recommendation to focus more research and resources on nonacademic skills. “Those are the things that determine whether you make it through college, as much as your GPA or your skill level when you start college,” she said. “We have tended to de-emphasize those skills in an era in which we are focusing almost exclusively on testing, and a narrow area of testing.”

The skill that may be the trickiest to teach and test may be the one that underlies and connects skills in all three areas: a student’s ability to transfer and apply existing knowledge to a problem in a new context. “Transfer is the sort of Holy Grail in this whole thing,” Mr. Pellegrino said. “We’d like to believe we can create Renaissance men who are experts in a wide array of disciplines and can blithely transfer skills from one to the other, but it just doesn’t happen that way.”

via Education Week: Panel of Scholars Define ’21st-Century Skills’.

Any cognitive benefit of video games? Video-game studies have serious flaws

Do video games provide some kind of cognitive benefit after game play? There have been arguments that video games lead to improved attention, quicker responses, and better visual skills. A paper in Frontiers in Psychology has reviewed the past literature and found that the studies all suffer from basic experimental-design flaws that can bias their results. This doesn’t mean that video games don’t have cognitive benefits. But we don’t have any evidence that they do.

Most of the studies compare the cognitive performances of expert gamers with those of non-gamers, and suffer from well-known pitfalls of experimental design. The studies are not blinded: participants know that they have been recruited because they have gaming expertise, which can influence their performance, because they are motivated to do well and prove themselves. And the researchers know which participants are in which group, so they can have preconceptions that might inadvertently affect participants’ performance.

New NSF Program: Cyberlearning: Transforming Education

The second expected new NSF program that might fund computing education research has just been released. Very exciting! What a great time to do work in computing education research!

Through the Cyberlearning: Transforming Education program, NSF seeks to integrate advances in technology with advances in what is known about how people learn to

- better understand how people learn with technology and how technology can be used productively to help people learn, through individual use and/or through collaborations mediated by technology;

- better use technology for collecting, analyzing, sharing, and managing data to shed light on learning, promoting learning, and designing learning environments; and

- design new technologies for these purposes, and advance understanding of how to use those technologies and integrate them into learning environments so that their potential is fulfilled.

Of particular interest are technological advances that allow more personalized learning experiences, draw in and promote learning among those in populations not currently served well by current educational practices, allow access to learning resources anytime and anywhere, and provide new ways of assessing capabilities. It is expected that Cyberlearning research will shed light on how technology can enable new forms of educational practice and that broad implementation of its findings will result in a more actively-engaged and productive citizenry and workforce.

Teaching computer games as the next Latin

People still argue that learning Latin improves “critical thinking skills” and “comparative analysis skills.” Despite these claims, there is little evidence that spontaneous transfer occurs from general learning. Transfer is hard, requires lots of initial knowledge, and works best when students are explicitly taught to transfer. In particular, learning Latin does not lead to general thinking skills. Next up? Creating video games!

Computer games have a broad appeal that transcends gender, culture, age and socio-economic status. Now, computer scientists in the US think that creating computer games, rather than just playing them could boost students’ critical and creative thinking skills as well as broaden their participation in computing. They discuss details in the current issue of the International Journal of Social and Humanistic Computing.

Study Gauges Teach for America Graduates’ Civic Involvement – NYTimes.com

This is an interesting study that addresses an issue of concern here: transfer. In computing education, we worry a lot about transfer. “If I teach them with Alice or EToys, will they be able to use real languages later?” “If I teach with a context like media or robotics, will they be able to program in their career when they graduate?”

This study looked at transfer from Teach for America. Does Teach for America lead to enhanced civic involvement later? The answer is “Not really.” Some youth activism does transfer (like the Freedom Summer mentioned in the article), but that’s rare. Transfer is hard in all forms of education.

The reasons for the lower rates of civic involvement, Professor McAdam said, include not only exhaustion and burnout, but also disillusionment with Teach for America’s approach to the issue of educational inequity, among other factors.

The study, “Assessing the Long-Term Effects of Youth Service: The Puzzling Case of Teach for America,” is the first of its kind to explore what happens to participants after they leave the program. It was done at the suggestion of Wendy Kopp, Teach for America’s founder and president, who disagrees with the findings. Ms. Kopp had read an earlier study by Professor McAdam that found that participants in Freedom Summer — the 10 weeks in 1964 when civil rights advocates, many of them college students, went to Mississippi to register black voters — had become more politically active.

via Study Gauges Teach for America Graduates’ Civic Involvement – NYTimes.com.
